Benchmarking Performance of OpenStack Swift Configurations

If you’ve followed OpenStack Swift, you already know it’s an extremely dynamic and flexible project with many ongoing proposals and plans: object encryption, erasure codes, and many smaller updates across different areas of development. The latest Swift release, 1.9.0, features full support for global clusters. This means you can distribute one cluster over multiple, geographically dispersed sites connected through a high-latency network.

To see how well a new feature really performs, you need testing tools that can reliably simulate a production workload. Object storage differs considerably from other kinds of storage: instead of organizing files into a directory hierarchy, object storage systems store objects in a flat, unnested set of containers and retrieve them by unique names. So testing object storage requires special tools that work with Swift’s operations and show its behavior under a predefined load. That’s where two benchmarking tools for OpenStack Object Storage (Swift), swift-bench and ssbench, come into play.

With the new release, we need to evaluate the performance of the newly added features, such as a region tier for data placement, a separate replication network, and read/write affinity.

Evaluating Swift’s new features

Let’s take a quick run through the new features. A region is a top-level tier in the Swift ring, essentially a grouping of zones. You can read in more detail about its structure and algorithm here. This tier supports geographically distributed clusters, allows replicas to be stored in multiple physical regions, and enables proxy affinity (in other words, the proxy server does not have to use a remote region if a local replica is available).
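To illustrate the read side of proxy affinity, here is a minimal Python model. In Swift 1.9.0 this behavior is configured in `proxy-server.conf` with `sorting_method = affinity` and a `read_affinity` value such as `r1z1=100, r1=200`, where a lower number means a higher priority. The helpers `parse_affinity` and `sort_by_affinity`, and the node dictionaries, are hypothetical; they only sketch the ordering idea and are not Swift's own implementation.

```python
from typing import Dict, List, Optional, Tuple

Rule = Tuple[int, Optional[int], int]  # (region, zone or None, priority)


def parse_affinity(setting: str) -> List[Rule]:
    """Parse a string like "r1z1=100, r1=200" into (region, zone, priority) rules."""
    rules = []
    for item in setting.split(","):
        key, value = item.strip().split("=")
        # "r1z1" -> region "1", zone "1"; "r1" -> region "1", no zone
        region_part, _, zone_part = key.strip()[1:].partition("z")
        zone = int(zone_part) if zone_part else None
        rules.append((int(region_part), zone, int(value.strip())))
    return rules


def sort_by_affinity(nodes: List[Dict], rules: List[Rule]) -> List[Dict]:
    """Return nodes ordered by their best (lowest) matching priority.

    Nodes that match no rule go last; sorted() is stable, so ties keep
    their original order.
    """
    def priority(node: Dict) -> float:
        matches = [prio for region, zone, prio in rules
                   if node["region"] == region
                   and (zone is None or node["zone"] == zone)]
        return min(matches, default=float("inf"))

    return sorted(nodes, key=priority)


# Three replicas of one object: one in a remote region, two in the local one.
replicas = [
    {"id": "a", "region": 2, "zone": 1},
    {"id": "b", "region": 1, "zone": 2},
    {"id": "c", "region": 1, "zone": 1},
]
ordered = sort_by_affinity(replicas, parse_affinity("r1z1=100, r1=200"))
print([node["id"] for node in ordered])  # ['c', 'b', 'a']
```

In this model a read tries `c` (same zone) first, then `b` (same region), and falls back to the remote replica `a` only if both fail.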

Benchmarking tools

We ran performance tests on a single virtual machine (VM) with Swift-All-In-One (SAIO) installed using swift-bench. This tool is part of the Swift project and makes it easy to benchmark a SAIO installation. For multinode installations, we used ssbench because it lets you exercise more specific and controlled workloads. It’s not part of the Swift project, but rather an open source tool from SwiftStack.

Why we did it

Our main goal in using ssbench and swift-bench was to measure how new updates affect the average number of requests per second that the test Object Storage cluster serves under a fixed, specific load.

How it’s done

ssbench uses a message queue protocol to control its worker servers and retrieve their results. Each test run scenario is defined in a JSON-formatted file, which can be configured for different types of performance testing: load, stress, stability, and so on.

For example, take this predefined scenario:

```json
{
    "name": "Medium test scenario",
    "sizes": [{
        "name": "tiny",
        "size_min": 1000,
        "size_max": 16000
    }, {
        "name": "small",
        "size_min": 100000,
        "size_max": 200000
    }, {
        "name": "medium",
        "size_min": 1000000,
        "size_max": 2000000
    }],
    "initial_files": {
        "tiny": 20,
        "small": 20,
        "medium": 2
    },
    "operation_count": 100,
    "crud_profile": [4, 3, 1, 1],
    "user_count": 5,
    "container_base": "ssbench",
    "container_count": 100,
    "container_concurrency": 10
}
```

The key fields are:

- `sizes` describes three types of files to upload; `size_min` and `size_max` give each type's size range in bytes.

- `initial_files` sets the number of files of each type.

- `operation_count` is the total number of requests.

- `crud_profile` allocates requests among Create, Read, Update, and Delete operations.

ssbench supports both v1.0 and v2.0 (Keystone) authentication, so you can use it for Swift as an OpenStack component and also for standalone Swift Object Storage.
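As a quick sanity check on the `crud_profile` weights, this Python sketch computes how the 100 operations in the scenario above would be expected to split across request types. It assumes ssbench picks each operation at random with probability proportional to its weight, so counts in a real run will vary around these values; `expected_op_counts` is a hypothetical helper, not part of ssbench.

```python
import json

# Only the two fields this calculation needs, copied from the scenario above.
SCENARIO = '{"operation_count": 100, "crud_profile": [4, 3, 1, 1]}'


def expected_op_counts(scenario: dict) -> dict:
    """Expected Create/Read/Update/Delete counts under weighted random selection."""
    weights = scenario["crud_profile"]
    total_weight = sum(weights)
    ops = scenario["operation_count"]
    names = ("create", "read", "update", "delete")
    return {name: ops * w / total_weight for name, w in zip(names, weights)}


counts = expected_op_counts(json.loads(SCENARIO))
for name, n in counts.items():
    print(f"{name:>6}: {n:.1f}")
```

With weights 4:3:1:1 (9 in total), roughly 44 creates, 33 reads, and 11 each of updates and deletes are expected.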

The results

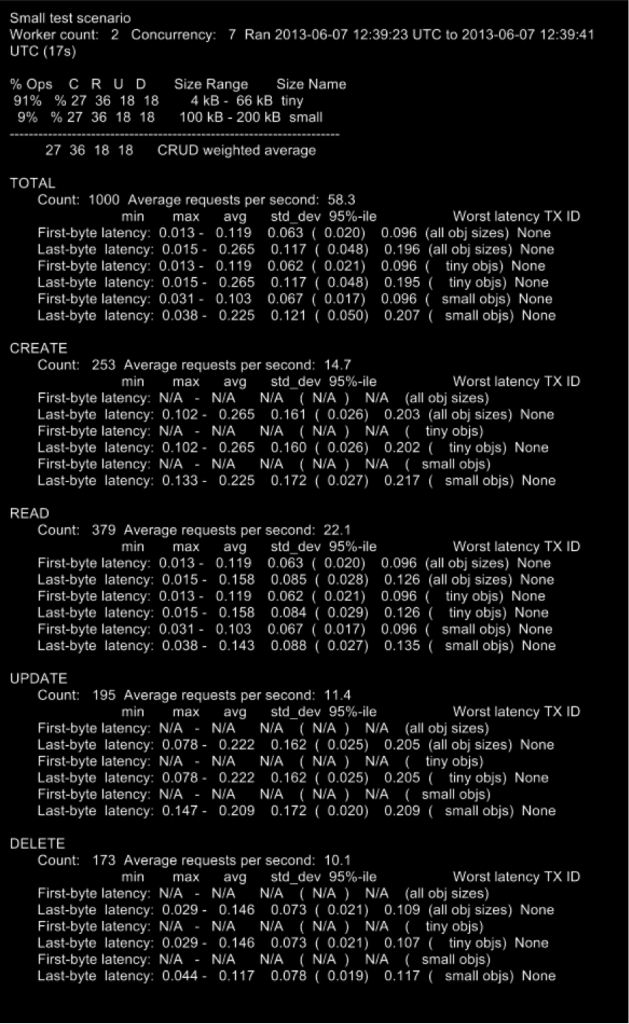

ssbench produces results in two forms. First, you get detailed statistics that include first-byte and last-byte latency, average requests per second, and the total number of processed requests for each request type (Create, Read, Update, and Delete). Second, it can write a CSV file with histogram data of requests completed per second. You can use these files to build charts that present the test results visually.

The example ssbench report above was gathered on a single node. The VM’s capacity is:

- RAM: 2 GB

- VCPUs: 1

- Timing cached reads: 5432.52 MB/sec

- Timing buffered disk reads: 57.91 MB/sec
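The per-second CSV output can also be summarized without a charting tool. The Python sketch below assumes a hypothetical layout with one row per second and a `completed` column holding the number of requests finished in that second; check the header of the file your ssbench version writes and adjust the column name. The sample numbers are made up for illustration.

```python
import csv
import io
from typing import Dict

# Hypothetical CSV layout (check your real file's header): one row per
# second of the run and the number of requests completed in that second.
# The numbers are made up for illustration.
SAMPLE_CSV = """second,completed
1,41
2,47
3,44
4,52
"""


def summarize(csv_text: str, column: str = "completed") -> Dict[str, float]:
    """Return run length, mean, and peak of per-second completion counts."""
    counts = [int(row[column]) for row in csv.DictReader(io.StringIO(csv_text))]
    if not counts:
        raise ValueError("no rows found")
    return {
        "seconds": len(counts),
        "mean_per_sec": sum(counts) / len(counts),
        "peak_per_sec": max(counts),
    }


summary = summarize(SAMPLE_CSV)
print(summary)
```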

Methodology

Applying these benchmarking tools gives you realistic, relevant data that can help you choose the best way to deploy your production environment and take full advantage of Swift Object Storage.

Rings comparison

Say you want to compare benchmark results for different combinations of regions, zones, and replicas. For that, we propose the following scenarios:

- Group four devices in one region, four zones with three replicas.

- Group four devices in one region, two zones with three replicas.

- Group four devices in one region, one zone with three replicas.

- Group four devices in two regions, four zones with two replicas.
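One way to build these four rings is with `swift-ring-builder`, whose `add` command accepts a `r<region>z<zone>-<ip>:<port>/<device>` device string in Swift 1.9.0. The Python sketch below only prints the commands for each layout. The SAIO-style IP address, ports, device names, partition power, `min_part_hours`, and weight are all assumptions, not the values from our testbed.

```python
from typing import List

# Hypothetical SAIO-style devices; IP, ports, and device names are assumptions.
DEVICES = [
    ("127.0.0.1", 6010, "sdb1"),
    ("127.0.0.1", 6020, "sdb2"),
    ("127.0.0.1", 6030, "sdb3"),
    ("127.0.0.1", 6040, "sdb4"),
]

# (regions, zones, replicas) for the four scenarios listed above
LAYOUTS = [(1, 4, 3), (1, 2, 3), (1, 1, 3), (2, 4, 2)]


def ring_commands(regions: int, zones: int, replicas: int,
                  builder: str = "object.builder", part_power: int = 10,
                  min_part_hours: int = 1, weight: int = 100) -> List[str]:
    """Build swift-ring-builder commands that spread DEVICES evenly, in order,
    across the given number of regions and zones (numbering starts at 1)."""
    n = len(DEVICES)
    cmds = [f"swift-ring-builder {builder} create {part_power} {replicas} {min_part_hours}"]
    for i, (ip, port, dev) in enumerate(DEVICES):
        region = i * regions // n + 1
        zone = i * zones // n + 1
        cmds.append(f"swift-ring-builder {builder} add r{region}z{zone}-{ip}:{port}/{dev} {weight}")
    cmds.append(f"swift-ring-builder {builder} rebalance")
    return cmds


for regions, zones, replicas in LAYOUTS:
    print(f"# {regions} region(s), {zones} zone(s), {replicas} replicas")
    print("\n".join(ring_commands(regions, zones, replicas)))
```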

We ran the tests against different commits in the code repository to compare performance before and with the 1.9.0 release. Throughput values are in op/s.

| Commit | Description | Regions | Zones | PUT (op/s) | GET (op/s) | DELETE (op/s) |
|---|---|---|---|---|---|---|
| dec517e3497df25cff70f99bd6888739d410d771 | Drop cache after fsync | 1 | 4 | 26.4 | 107.8 | 32.0 |
| 13347af64cc976020e343f0fd3767f09e26598de | Merge "Improve swift's keystoneauth ACL support" | 1 | 4 | 35.1 | 116.8 | 32.9 |
| 151313ba8c6612183f6a733edbcbc311b3360949 | master (Swift 1.9.0) | 1 | 4 | 45.3 | 133.1 | 44.2 |
| 151313ba8c6612183f6a733edbcbc311b3360949 | master (Swift 1.9.0) | 1 | 2 | 49.1 | 160.3 | 51.3 |
| 151313ba8c6612183f6a733edbcbc311b3360949 | master (Swift 1.9.0) | 1 | 1 | 42.3 | 175.3 | 40.0 |
| 151313ba8c6612183f6a733edbcbc311b3360949 | master (Swift 1.9.0) | 2 | 4 | 54.3 | 109.8 | 49.7 |
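To quantify the change between the oldest commit and the 1.9.0 release for the same one-region, four-zone layout, this short Python snippet computes the percentage change per request type from the table values. It is plain arithmetic on the published numbers, not part of either benchmarking tool.

```python
# Throughput in op/s for 1 region / 4 zones, copied from the table above.
BEFORE = {"PUT": 26.4, "GET": 107.8, "DELETE": 32.0}  # oldest commit: Drop cache after fsync
AFTER = {"PUT": 45.3, "GET": 133.1, "DELETE": 44.2}   # master (Swift 1.9.0)


def percent_change(before: dict, after: dict) -> dict:
    """Relative change from before to after, in percent, per request type."""
    return {op: 100.0 * (after[op] - before[op]) / before[op] for op in before}


change = percent_change(BEFORE, AFTER)
for op, pct in change.items():
    print(f"{op}: {pct:+.1f}%")
```

This works out to roughly +72% for PUT, +23% for GET, and +38% for DELETE.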

Affinity comparison

The following tables compare results in op/s for each configuration with affinity disabled and enabled:

- Group four devices in one region, four zones with three replicas.

| Request | Disabled | Enabled |
|---|---|---|
| PUT | 45.3 | 7.2 |
| GET | 133.1 | 160.3 |
| DELETE | 44.2 | 55.2 |

- Group four devices in one region, two zones with three replicas.

| Request | Disabled | Enabled |
|---|---|---|
| PUT | 49.1 | 3.8 |
| GET | 160.3 | 157.0 |
| DELETE | 51.3 | 57.8 |

- Group four devices in one region, one zone with three replicas.

| Request | Disabled | Enabled |
|---|---|---|
| PUT | 42.3 | 55.3 |
| GET | 175.3 | 160.3 |
| DELETE | 40.0 | 55.2 |

- Group four devices in two regions, four zones with two replicas.

| Request | Disabled | Enabled |
|---|---|---|
| PUT | 54.3 | 3.4 |
| GET | 109.8 | 136.0 |
| DELETE | 49.7 | 49.0 |

As the tables show, enabling affinity sharply reduces PUT throughput in every configuration except the single-zone one, while GET throughput rises in both four-zone configurations. The PUT drop happens because, with write affinity, only one device becomes a local node. When the ring has a single region and zone, all nodes are local, and PUT throughput rises instead.
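The effect of the ring layout on locality can be sketched by counting how many of the four devices fall inside the local tier. The exact `write_affinity` value used in these tests is not stated, so this Python model assumes zone-level locality (`r1z1`) and a hypothetical even, in-order split of devices across regions and zones; it only illustrates the trend.

```python
from typing import List, Tuple

N_DEVICES = 4
# (regions, zones) for the four layouts compared above
LAYOUTS = {
    "1 region, 4 zones": (1, 4),
    "1 region, 2 zones": (1, 2),
    "1 region, 1 zone": (1, 1),
    "2 regions, 4 zones": (2, 4),
}


def device_tiers(regions: int, zones: int) -> List[Tuple[int, int]]:
    """Assumed (region, zone) of each device when split evenly in order."""
    return [(i * regions // N_DEVICES + 1, i * zones // N_DEVICES + 1)
            for i in range(N_DEVICES)]


def count_local(devices: List[Tuple[int, int]], region: int = 1, zone: int = 1) -> int:
    """Number of devices inside the assumed local tier r<region>z<zone>."""
    return sum(1 for r, z in devices if r == region and z == zone)


local = {name: count_local(device_tiers(*layout)) for name, layout in LAYOUTS.items()}
for name, n in local.items():
    print(f"{name}: {n} of {N_DEVICES} devices local")
```

Under these assumptions, only the single-zone ring has all four devices local, which is also the one configuration where PUT throughput rose with affinity enabled.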

Throughput

Another way to obtain relevant data for analysis is to compare results while incrementing the number of ssbench workers. In our test case, we started every worker on a separate host, with requests created by 12 users.

The idea behind this ramp-up benchmark scenario is to increase the client concurrency step by step at each benchmark run to find the cluster's limit.
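The ramp-up procedure can be sketched in Python as a loop that raises concurrency one step at a time and stops once throughput no longer improves. `run_benchmark` is a hypothetical callable standing in for one ssbench run at a given concurrency that returns total op/s; how you launch that run is outside this sketch.

```python
from typing import Callable, List, Tuple


def ramp_up(run_benchmark: Callable[[int], float], start: int = 1, step: int = 1,
            max_concurrency: int = 64) -> Tuple[int, List[Tuple[int, float]]]:
    """Increase concurrency until throughput stops rising.

    Returns the concurrency with the highest observed throughput and every
    (concurrency, op/s) sample taken along the way.
    """
    history: List[Tuple[int, float]] = []
    best_c, best_ops = start, float("-inf")
    c = start
    while c <= max_concurrency:
        ops = run_benchmark(c)
        history.append((c, ops))
        if ops <= best_ops:
            break  # no improvement: the previous step was the cluster's limit
        best_c, best_ops = c, ops
        c += step
    return best_c, history
```

Stopping at the first non-improving step is sensitive to noise; in practice you might repeat each step or require several consecutive drops before declaring the limit.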

Other methodologies

To get a good look at what was really happening, we ran tests on a multinode cluster with 9 devices divided into 3 regions. We started every worker on a separate host and created requests from 20 users. The ssbench master distributes the benchmark run across all client servers while still coordinating the entire run. The scenario used 20 users, 100 containers, and 100 files of 512 MB each.

ID_run | r1-01 | r1-02 | r1-03 | r2-01 | r2-02 | r2-03 | r3-01 | r3-02 | r3-03 |

1 | master | worker | worker | ||||||

2 | master | worker | worker | ||||||

3 | master | worker | worker | ||||||

4 | master | worker | worker | ||||||

5 | master | worker | worker | worker | |||||

6 | master | worker | worker | worker | |||||

7 | master | worker | worker | worker | worker |

We used this distribution of workers to collect performance results across the cluster's regions.

- In case 1, only a local region is used.

- In cases 2 and 3, the master gathers results from one nonlocal region.

- In case 4, the master gathers results from two nonlocal regions.

- In cases 5 through 7, we increase the number of workers.

The following table shows the performance with the default configuration for each request in op/s.

| Run ID | Create | Update | Delete | Read |
|---|---|---|---|---|
| 1 | 20.1 | 25.0 | 21.6 | 228.7 |
| 2 | 22.2 | 23.9 | 21.2 | 240.2 |
| 3 | 22.8 | 27.7 | 22.1 | 223.7 |
| 4 | 20.0 | 24.6 | 18.2 | 244.8 |
| 5 | 21.7 | 20.2 | 22.3 | 249.9 |
| 6 | 17.4 | 15.6 | 20.8 | 241.2 |
| 7 | 11.5 | 11.3 | 10.6 | 24.6 |

At step 6, with 4 workers, we reach the cluster's limit: this is where the number of operations per second begins to decline.
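The peak can be located from the table with a few lines of Python. This sketch treats the sum of the four per-type rates as a run's overall throughput, which is an assumption about how to combine them, and then reports the run with the highest total.

```python
# Per-run throughput in op/s copied from the table above:
# (create, update, delete, read)
RUNS = {
    1: (20.1, 25.0, 21.6, 228.7),
    2: (22.2, 23.9, 21.2, 240.2),
    3: (22.8, 27.7, 22.1, 223.7),
    4: (20.0, 24.6, 18.2, 244.8),
    5: (21.7, 20.2, 22.3, 249.9),
    6: (17.4, 15.6, 20.8, 241.2),
    7: (11.5, 11.3, 10.6, 24.6),
}

totals = {run: sum(rates) for run, rates in RUNS.items()}
peak_run = max(totals, key=totals.get)

for run, total in totals.items():
    marker = "  <- peak" if run == peak_run else ""
    print(f"run {run}: {total:6.1f} op/s{marker}")
```

Total throughput peaks at run 5 (about 314 op/s) and falls in runs 6 and 7.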

Summary

This research leads us to one conclusion: in our tests, Swift release 1.9.0 delivered higher throughput than the earlier commits we measured, making it a step forward in the performance of your data storage.

This is just one example of using benchmarking tools. At every turn of new feature development, our research team jumps in and creates new kinds of testing methodologies to ensure product quality.

To set up your own testing environment, or get more info about the tools we used, check out these key links:

- ssbench GitHub: https://github.com/swiftstack/ssbench

- ssbench on the SwiftStack blog: http://swiftstack.com/blog/2013/04/18/openstack-summit-benchmarking-swift/

- Swift 1.9.0 announcement on the openstack-dev mailing list: http://lists.openstack.org/pipermail/openstack-dev/2013-July/011221.html

- Swift 1.9.0 info: https://launchpad.net/swift/+milestone/1.9.0

Ksenia Demina is a Quality Assurance Engineer with Mirantis' OpenStack Research and Development Team. She’s also working on her master’s degree in Computer Security and Cryptography at Saratov State University in Saratov, Russia.