A new agent management approach for Quantum in OpenStack Grizzly

Quantum was introduced in OpenStack’s Folsom release and brought features such as tenant control and a plugin mechanism that enabled the use of different technologies for managing networks via its API. It empowered users to create more advanced network patterns for connecting their VM instances than Nova allowed. But the more Quantum is used, the more questions about its scalability and reliability arise.

Quantum runs several specific services, called agents, that are backends for processing different kinds of requests and providing different kinds of features. For example, the L3 agent handles routing configuration and provides floating IP support. To make a Quantum setup more durable, you need several agents of each type running on different hosts. However, to make that work, the Quantum server must be able to schedule requests properly to avoid conflicts in network configuration.

In Folsom, Quantum didn’t have facilities to schedule its agents between cluster nodes, but the Grizzly release brings support for a new component management approach, which addresses this issue.

Agents in Quantum for OpenStack Folsom

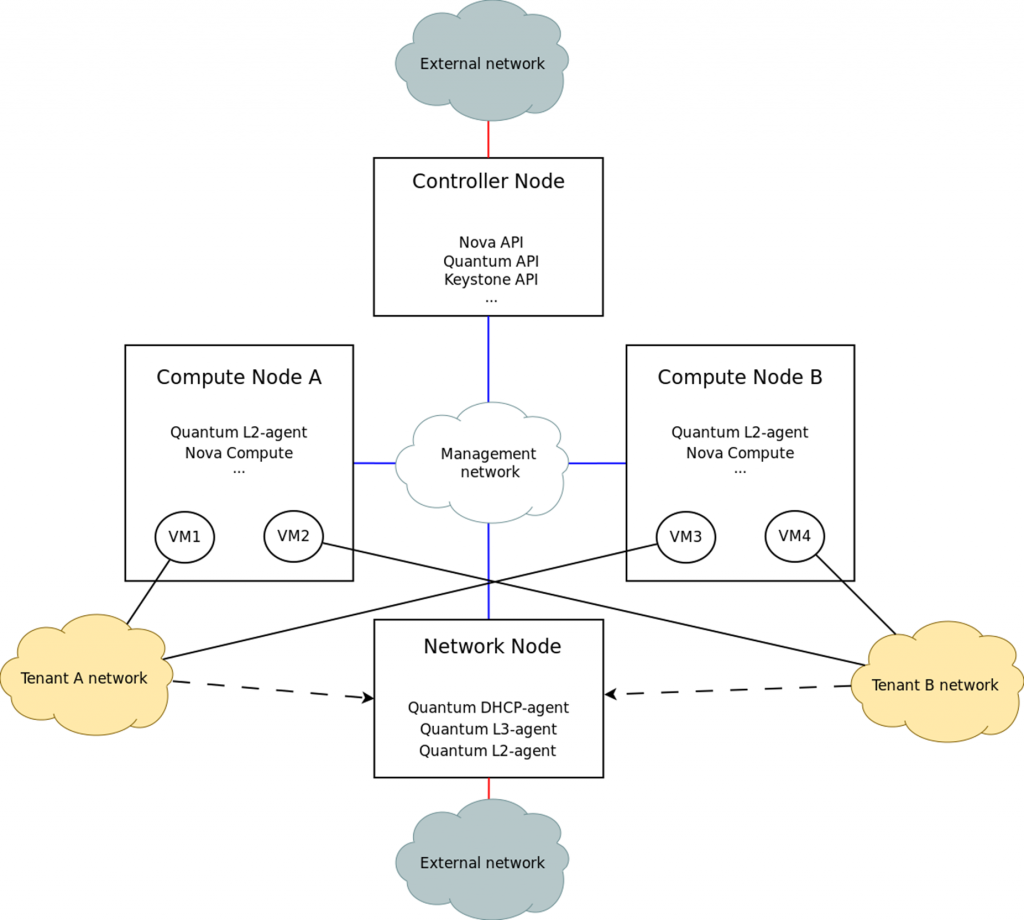

Consider a typical OpenStack Folsom networking setup, as shown in Figure 1.

Figure 1. The typical networking setup in Folsom

This assumes that a cloud provider has created the following types of nodes:

- Controller node(s), which provide APIs for authentication, computing, networking, and other services. At least one node of this type is required. It can be scaled easily by adding nodes.

- Compute node(s), which run VM instances for tenants. At least one node of this type is required. It can be scaled easily by adding nodes as well.

- Network node(s), which provide DHCP and L3 network services (routing and “floating” IPs). At least one node of this type is required, and its scalability is limited by the fact that there is no way to schedule Quantum DHCP services and routers between different network nodes.

The diagram also shows three different types of networks:

- Management network, which provides connectivity between nodes of the cluster. It is routed only inside a data center.

- Data (tenant) network, which provides connectivity between VM instances of a tenant. It can be used to set up private networks to ensure tenant isolation. The actual technique used to create these networks is determined by the chosen Quantum core plugin. Typical OpenStack installations rely upon Open vSwitch and VLANs or GRE tunnels.

- External network, which provides VM instances with Internet access and a preallocated range of IP addresses that are routed on the Internet (“floating” IPs which can be assigned to VMs later). A cloud provider may also decide to expose cluster OpenStack APIs to this network.

Each compute (and network) node must run a Quantum L2-agent, which provides a base for creating the L2-networks used by VM instances. It’s up to the core Quantum plugin to provide a technique for isolating separate networks. For example, the Quantum Open vSwitch plugin makes use of VLANs and GRE tunnels (which basically are “L2-networks over an existing L3-network”).

A network node usually provides a DHCP server for each of the private networks, which is used by VM instances to receive their network configuration parameters. To provide L3-services such as routing and assignment of “floating” IPs (implemented as SNAT/DNAT iptables rules), an L3-agent must be running as well.

All of this means that a network node is effectively a single point of failure (SPoF). If it fails, VM instances can’t get their network configuration parameters, and even if they could, they would not be able to access the Internet, because SNAT and DNAT are performed on the network node. The result is a loss of Internet connectivity, potentially for the entire cluster.

To prevent this, you can run multiple DHCP-agents on different nodes, or even set up a few distinct network nodes to ensure high availability of L3-services. But this solution has several drawbacks:

- There is no way to schedule a network to be hosted by a specific DHCP-agent or routed via a specific node running an L3-agent.

- There is no way to monitor the activity of existing DHCP- or L3-agents and reschedule hosted (routed) networks to different agents in the case of a failure of the original hosting (routing) agents.

The lack of an agent scheduler also limits the scalability of Quantum services, as there is no way to distribute networks to be hosted (routed) between different nodes running corresponding Quantum agents. For example, if there is only one running L3-agent, then the Internet access bandwidth of the whole cluster is limited by the external network interface bandwidth of the network node.

OpenStack cloud scheduling feature in Quantum for Grizzly

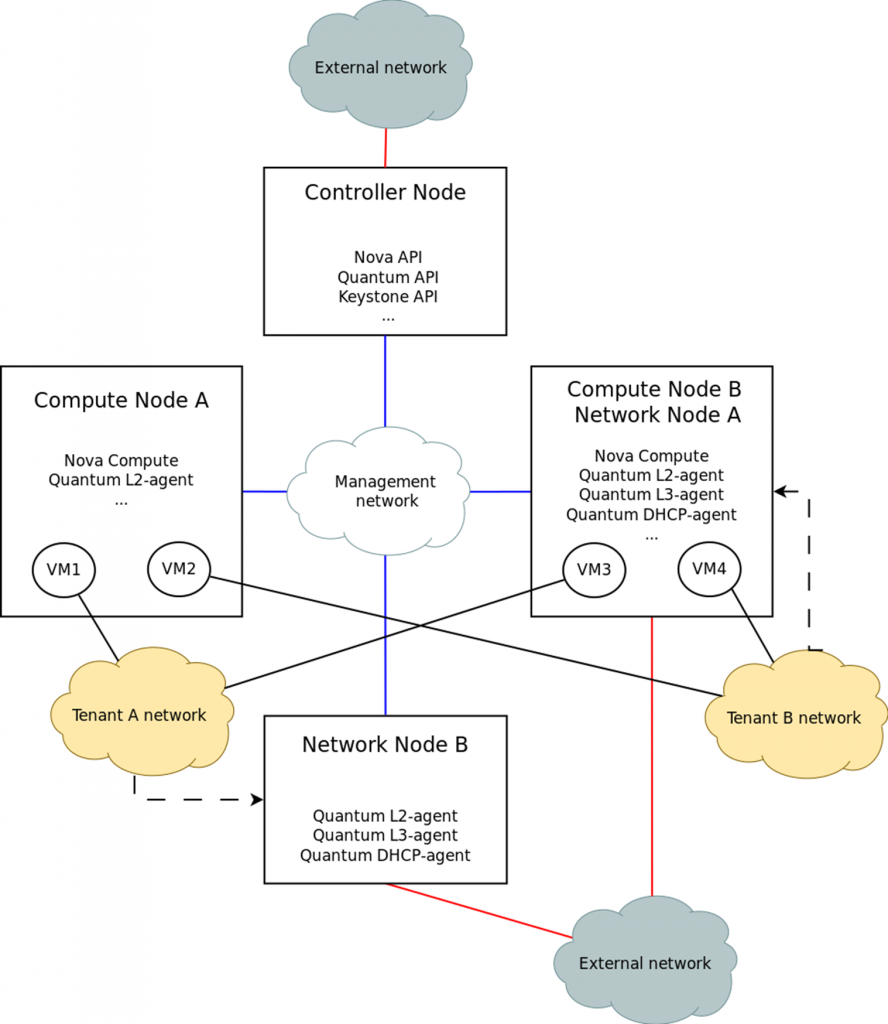

The key difference between a typical networking setup in Folsom (as shown in Figure 1) and one in Grizzly (as shown in Figure 2) is that several nodes can now run DHCP- and L3-agents. Newly created networks and routers can be distributed among those nodes, or hosted redundantly on several of them to improve the reliability of those services. Any node running DHCP- or L3-agents effectively acts as a “network” node.

Figure 2. The typical networking setup in Grizzly

A blueprint for the Quantum scheduler was registered and implemented in Grizzly’s version of Quantum. The specification defines a component object that represents a Quantum agent, a Quantum server, or any advanced service. By design, every component is responsible for reporting its state and location to the Quantum server. In fact, the definition of a component provides a unified way for communication between the Quantum server and different Quantum components.

Since components periodically report their state to the Quantum server, it’s possible to discover them and schedule requests based on information about the health and availability of each component. Although some components, such as the DHCP agent, are able to serve a single network without any request scheduling, most components require scheduling to work properly and avoid configuration conflicts.

Heartbeats, messages every component sends to the Quantum server periodically, are the cornerstone of the scheduling mechanism in Quantum. Every message contains the name of the component, the name of the host on which it’s running, and information about internal state that might be used by a scheduling algorithm. Heartbeats are sent asynchronously over the message queue, and then are handled by the Quantum server, which stores all the latest heartbeat information. Since all the components use a common message queue, the Quantum server automatically discovers any new component running in the specific Quantum setup.
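The heartbeat flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Quantum's actual code: the `Heartbeat` and `HeartbeatRegistry` classes, their field names, and the host names are all hypothetical, and the message queue is replaced by a direct method call.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Heartbeat:
    """One state report; the field names are illustrative, not Quantum's."""
    component: str                               # e.g. "dhcp-agent"
    host: str                                    # host the component runs on
    state: dict = field(default_factory=dict)    # internal state for a scheduler
    received_at: float = field(default_factory=time.time)


class HeartbeatRegistry:
    """Server-side store that keeps only the latest heartbeat per component."""

    def __init__(self):
        self._latest = {}

    def report(self, hb):
        # A key that has not been seen before means a new component
        # has just been discovered.
        key = (hb.component, hb.host)
        is_new = key not in self._latest
        self._latest[key] = hb
        return is_new

    def latest(self, component, host):
        return self._latest[(component, host)]

    def known_components(self):
        return sorted(self._latest)


registry = HeartbeatRegistry()
first = registry.report(Heartbeat("dhcp-agent", "net-node-1", {"networks": 2}))
again = registry.report(Heartbeat("dhcp-agent", "net-node-1", {"networks": 3}))
registry.report(Heartbeat("l3-agent", "net-node-2"))
print(registry.known_components())
```

The first report from a given component and host registers it; later reports only overwrite the stored state, which matches the "latest heartbeat information" behavior described above.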

When an object such as a router or a network is being created, all required services must be configured to serve it. Services are provided by different Quantum components, and configuration is limited to sending them appropriate messages. However, since multiple components providing the same service might be running within a single Quantum setup, it’s necessary to choose the one that will provide its service to a specific object.

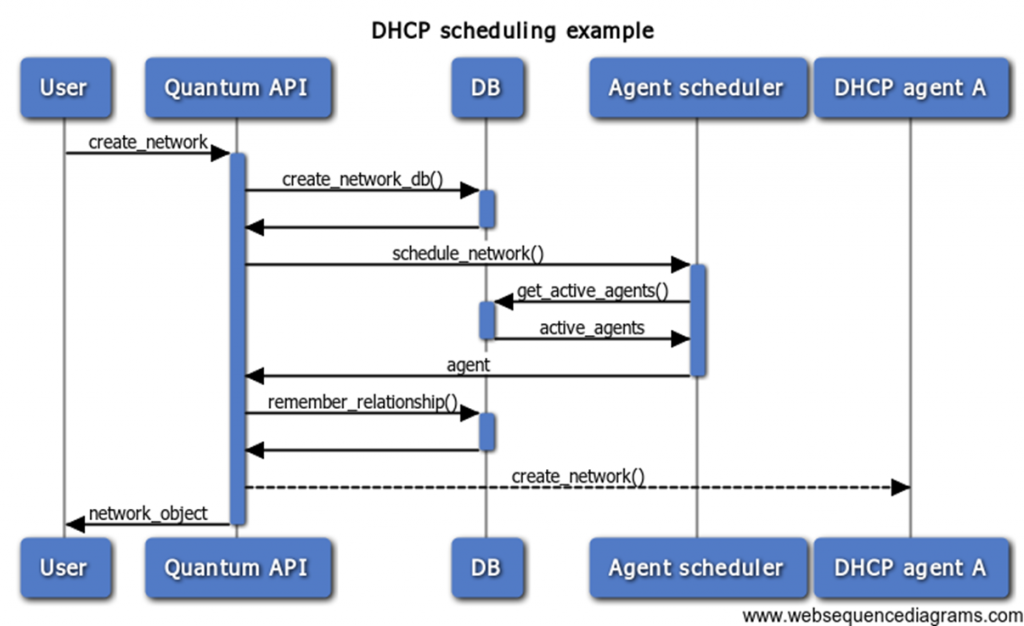

The Quantum agent scheduler receives all the required information from heartbeat messages, and the default algorithm in Grizzly schedules objects randomly to active agents. An agent is considered active if the latest heartbeat was received in a timespan that does not exceed the limit defined in the Quantum configuration file. No internal state information is taken into account. If an object was scheduled to an agent, it’s assumed that the object is hosted by the agent. Once an object is scheduled to an agent, the Quantum server creates a permanent record about that relationship and sends all requests related to that object to that specific agent. You can see an example of scheduling a network to a DHCP agent in Figure 3.

Figure 3. An example of network scheduling
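To make the default behavior concrete, here is a hedged Python sketch of random scheduling among active agents. The `AgentScheduler` class, the `agent_down_time` parameter name, and the agent identifiers are assumptions made for illustration; timestamps are passed in explicitly so that the example is deterministic.

```python
import random
import time


class AgentScheduler:
    """Toy model of the described default: pick a random active agent."""

    def __init__(self, agent_down_time=5.0, rng=None):
        self.agent_down_time = agent_down_time   # max heartbeat age, in seconds
        self.last_seen = {}                      # agent id -> latest heartbeat time
        self.bindings = {}                       # object id -> agent id
        self.rng = rng or random.Random()

    def heartbeat(self, agent, now=None):
        self.last_seen[agent] = time.time() if now is None else now

    def active_agents(self, now=None):
        # An agent is active if its latest heartbeat is recent enough.
        now = time.time() if now is None else now
        return sorted(agent for agent, seen in self.last_seen.items()
                      if now - seen <= self.agent_down_time)

    def schedule(self, obj, now=None):
        # An existing binding is permanent: reuse it without rescheduling.
        if obj in self.bindings:
            return self.bindings[obj]
        candidates = self.active_agents(now)
        if not candidates:
            return None
        agent = self.rng.choice(candidates)      # internal state is ignored
        self.bindings[obj] = agent
        return agent


sched = AgentScheduler(agent_down_time=5.0, rng=random.Random(0))
sched.heartbeat("dhcp@node-1", now=100.0)
sched.heartbeat("dhcp@node-2", now=97.0)
sched.heartbeat("dhcp@node-3", now=90.0)         # too old to count at t=101
chosen = sched.schedule("net-A", now=101.0)
```

Only `dhcp@node-1` and `dhcp@node-2` are candidates at `t=101`. Calling `schedule("net-A", ...)` again later returns the same agent, because the binding record is consulted before any liveness check.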

If a component goes down, it stops sending heartbeats, and after a defined period of time it is considered inactive, so no new objects will be scheduled to it. As long as the host the agent was running on is up, the services it has already configured continue working. Quantum, however, does not act on the failure of existing components, and won’t migrate objects from a component that failed.

Also, the default scheduling algorithm in Quantum Grizzly does not perform any scheduling for objects that are already hosted by at least one other component. Relationships, however, can be created or modified manually. The Quantum API provides methods for binding objects to components. So if there is a need to host a single object on multiple agents, that can be done using the CLI client or any other tool that uses the Quantum API.
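The interplay between automatic scheduling and manual binding can be modeled as follows. This is a sketch under assumptions: `BindingTable` and its methods are hypothetical names, not the Quantum API, and the automatic step picks the first active agent rather than a random one so that the result is reproducible.

```python
class BindingTable:
    """Object -> set of hosting agents; a toy model, not Quantum's schema."""

    def __init__(self):
        self.hosts = {}

    def auto_schedule(self, obj, active_agents):
        # Mirrors the described default: objects that already have at
        # least one host are skipped, even if that host has since failed.
        if self.hosts.get(obj):
            return False
        if not active_agents:
            return False
        # First agent is used for determinism; the real default is random.
        self.hosts[obj] = {active_agents[0]}
        return True

    def bind(self, obj, agent):
        # Manual binding, as an operator would request through the API.
        self.hosts.setdefault(obj, set()).add(agent)

    def unbind(self, obj, agent):
        self.hosts.get(obj, set()).discard(agent)


table = BindingTable()
scheduled = table.auto_schedule("net-A", ["dhcp@node-1", "dhcp@node-2"])

# dhcp@node-1 goes down: the binding stays and nothing is rescheduled.
rescheduled = table.auto_schedule("net-A", ["dhcp@node-2"])

# The operator adds a second host by hand to get redundancy.
table.bind("net-A", "dhcp@node-2")
```

After these steps `net-A` is bound to both agents, but only because of the explicit `bind` call; the automatic path never added the second host.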

Conclusion

The new Quantum agent scheduler implemented in Grizzly can actually give you:

- A general component management framework. The Quantum service collects all the information about health and status of all Quantum components in a single place, improving observability and allowing you to monitor and manage components in an easy and reliable way.

- High availability. The Quantum server schedules new objects only to active components, and objects can be hosted redundantly on several components, which helps keep the cloud operable if one of the components goes down. Having several DHCP nodes in that case will keep the network operable if one of them suddenly stops working. Furthermore, it’s possible to run a few distinct network nodes connected to different external networks so that instances stay accessible from the Internet, even if one of the ISPs goes down.

- Scalability. Networks and routers being created are apportioned between several cluster nodes, which allows you to scale networking easily by adding new nodes. This is mostly useful for scaling external network bandwidth, but it also reduces the number of running DHCP-server processes on a single node.

Overall, Grizzly Quantum provides more flexibility, scalability, and reliability as your clusters grow.