Hyper-V integration in the OpenStack platform

At tcp cloud we constantly test the latest technologies and approaches related to OpenStack. This time we decided to see how multi-hypervisor support in the OpenStack platform really works. Our entire production environment uses native KVM virtualization, but today we take a closer look at Hyper-V integration and the options for deploying it. Later posts will cover PowerKVM and VMware hypervisor integrations as well.

We followed the instructions published by Cloudbase, the company that maintains support for Microsoft technologies in OpenStack services. When integrating any hypervisor into OpenStack, there are two important questions to ask:

- Is there a Nova compute driver for provisioning on the virtualization platform?

- Which networking models can be used with Neutron?

The first question is the easier one, since as of the Icehouse release OpenStack supports almost all virtualization platforms, such as Xen, KVM, VMware, Hyper-V and PowerKVM. A Nova driver for Hyper-V has been available since the Grizzly release (April 2013), and thanks to Cloudbase there is even a simple installer for Microsoft Windows Server 2008 R2, 2012 and 2012 R2. The installer has the following features:

- Sets up an independent Python environment to avoid conflicts

- Installs and registers all required dependencies

- Dynamically generates nova.conf based on the parameters specified by the user

- Creates a new Hyper-V virtual switch if necessary

- Registers Nova Compute as a system service and starts it

- Registers the Neutron Hyper-V agent as a service and starts it

- Allows use of the Microsoft iSCSI Initiator

- Allows use of Hyper-V migration

- Includes FreeRDP for Hyper-V console access

The second question is more complex, because OpenStack, and cloud computing in general, always depends on a good network architecture. There are two ways to integrate Hyper-V with the Neutron networking service:

- Neutron Hyper-V agent: the standard Neutron driver, used with the ML2 plugin on the Network Node (the OpenStack controller in our case). It is included in the basic Cloudbase installer.

- Neutron Open vSwitch for Hyper-V: an attempt to provide a networking plugin using the standard Neutron approach. The aim is to let Hyper-V build SDN networks of the VXLAN and GRE types. Unfortunately, this solution is still in beta and is not suitable for production environments.

During our testing we tried both options, but we decided to stick with the standard Hyper-V agent for Neutron because of its stability.

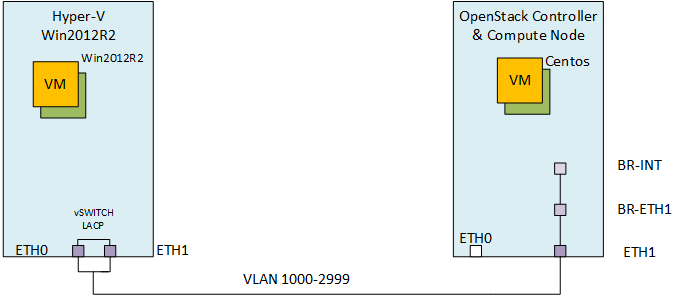

We created the following dedicated multi-hypervisor topology for our tests:

OpenStack Controller

We used physical servers for the tests: 2x Intel Xeon X5650 @ 2.67 GHz, 128 GB RAM and an 80 GB LUN on an IBM Storwize V7000, connected via SAN.

The operating system is CentOS 6.5 (kernel 2.6.32-431.29.2.el6.x86_64), installed via Kickstart.

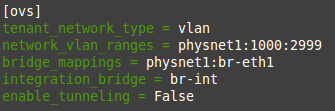

The server acts as the control node of the OpenStack platform and provides all services (Keystone, Cinder, Glance, Nova, Neutron) along with the MySQL database and RabbitMQ. At tcp cloud we use the SaltStack configuration tool (blog) to deploy OpenStack and other products, so we had a new controller with the standard KVM configuration running within an hour. Networking is built on the Neutron ML2 (Modular Layer 2) plugin with the VLAN (Virtual LAN) type driver and the Hyper-V mechanism driver, using the VLAN range 1000-2999.
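The ML2 settings described above could be expressed roughly as in the sketch below. It is a hedged illustration, not our actual Salt output: the physical network label physnet1 is taken from the net-create command used later in this post, the bridge name br-eth1 comes from the Open vSwitch step, and depending on packaging the [ovs] section may live in the Open vSwitch agent's own configuration file rather than in plugin.ini. The function name write_ml2_fragment is ours.

```bash
#!/usr/bin/env bash
# Sketch only: write the ML2 settings described above to a file for review.
# Assumptions: physical network label "physnet1" and bridge "br-eth1"; the real
# Salt-generated /etc/neutron/plugin.ini contains more sections than this.
write_ml2_fragment() {
    local target="$1"
    cat > "$target" <<'EOF'
[ml2]
type_drivers = vlan
tenant_network_types = vlan
# openvswitch serves the KVM side, hyperv serves the Hyper-V compute node
mechanism_drivers = openvswitch,hyperv

[ml2_type_vlan]
# tenant VLANs are allocated from this range on physnet1
network_vlan_ranges = physnet1:1000:2999

[ovs]
# maps the physical network label to the bridge on the controller
bridge_mappings = physnet1:br-eth1
EOF
}

# Usage: write to a scratch file and compare it with the live config
# write_ml2_fragment /tmp/ml2-fragment.ini
# diff /tmp/ml2-fragment.ini /etc/neutron/plugin.ini
```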

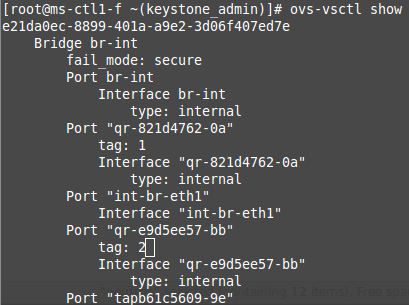

We also had to modify the Open vSwitch configuration:

[root@ms-ctl1-f ~]# ovs-vsctl add-br br-eth1

[root@ms-ctl1-f ~]# ovs-vsctl add-port br-eth1 eth1
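Open vSwitch cannot add a port to a bridge that does not exist yet, so br-eth1 has to be created before eth1 is attached. A minimal, re-runnable sketch of the same two steps, assuming the bridge and NIC names above, wraps them in a shell function. The OVS_VSCTL override is our own hypothetical convenience for dry runs, not an Open vSwitch feature.

```bash
#!/usr/bin/env bash
# Sketch: create the provider bridge first, then attach the physical NIC.
# --may-exist makes re-runs harmless if the bridge or port already exists.
# OVS_VSCTL can be overridden (e.g. OVS_VSCTL=echo) for a dry run.
setup_provider_bridge() {
    local bridge="$1" nic="$2"
    local vsctl="${OVS_VSCTL:-ovs-vsctl}"
    "$vsctl" --may-exist add-br "$bridge"
    "$vsctl" --may-exist add-port "$bridge" "$nic"
    # print the ports now attached to the bridge for a quick check
    "$vsctl" list-ports "$bridge"
}

# Usage on the controller:
# setup_provider_bridge br-eth1 eth1
```

With --may-exist, a second run succeeds instead of failing on the already existing bridge or port.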

Creating a new network creates a new port as well:

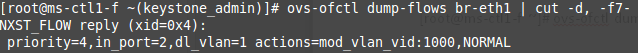

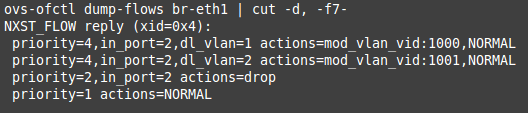

The output below shows that the local VLAN tag 2 corresponds to VLAN ID 1000:

/etc/neutron/plugin.ini
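The translation between the local tag and the VLAN ID on the wire is done by OpenFlow rules on the provider bridge. As a hedged sketch, assuming the rule format installed by the Icehouse-era Open vSwitch agent (dl_vlan=<local tag> with an action mod_vlan_vid:<VLAN ID>), a small filter over ovs-ofctl dump-flows output can print that mapping. The function name vlan_map is ours.

```bash
#!/usr/bin/env bash
# Sketch: print "local_tag -> vlan_id" pairs from the OpenFlow rules on the
# provider bridge. Assumption: rules look like
# "...dl_vlan=<tag> actions=mod_vlan_vid:<id>,...".
vlan_map() {
    sed -n 's/.*dl_vlan=\([0-9][0-9]*\).*mod_vlan_vid:\([0-9][0-9]*\).*/\1 -> \2/p'
}

# Usage on the controller:
# ovs-ofctl dump-flows br-eth1 | vlan_map
```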

Compute Node

The compute node is a separate server with the same hardware as the KVM compute/controller node, running MS Windows Server 2012 R2 and Hyper-V Server 2012 as its OS.

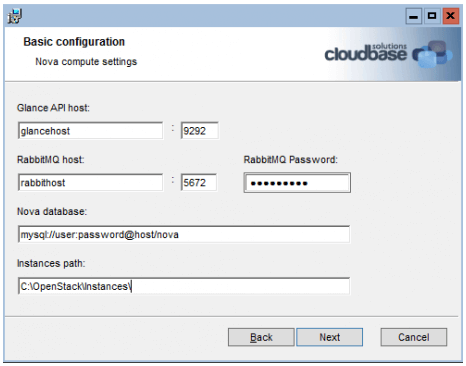

To install the Nova Compute service we used the automated installer from the Cloudbase website. It lets you set the authentication type (Keystone in our case), the API addresses and their ports, all Nova passwords and more.

Testing

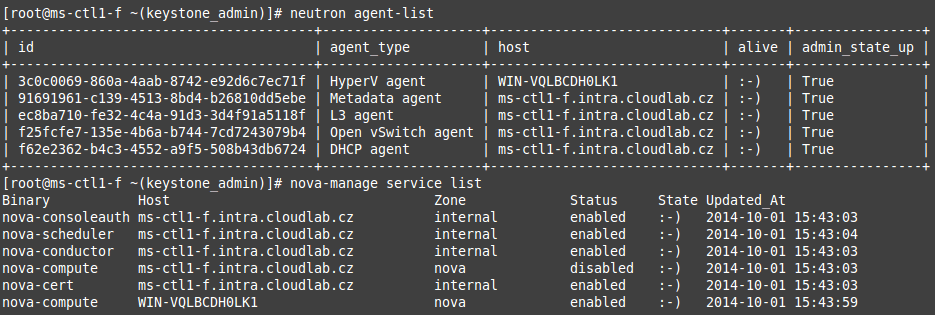

To launch instances only on the Hyper-V server, we had to disable the nova-compute service on the controller:

[root@ms-ctl1-f ~]# nova-manage service disable --host=ms-ctl1-f.intra.cloudlab.cz --service=nova-compute
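Besides nova-manage, the nova client offers service-disable and service-list, which go through the Nova API and make it easy to confirm the result. A minimal sketch, assuming the Icehouse-era nova client and admin credentials in the environment; the function name and the NOVA dry-run override are our own hypothetical additions.

```bash
#!/usr/bin/env bash
# Sketch: disable nova-compute on a host through the Nova API, then list the
# compute services so the "disabled" status can be checked.
# NOVA can be overridden (e.g. NOVA=echo) for a dry run.
disable_compute() {
    local host="$1"
    local nova="${NOVA:-nova}"
    "$nova" service-disable "$host" nova-compute
    "$nova" service-list --binary nova-compute
}

# Usage on the controller:
# disable_compute ms-ctl1-f.intra.cloudlab.cz
```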

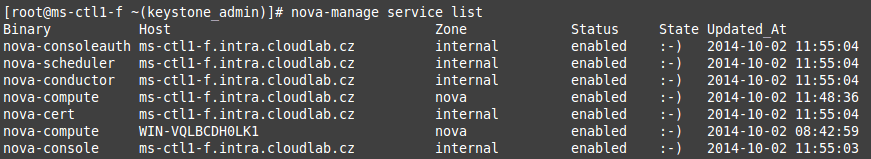

After restarting the services we can verify that the Hyper-V agent on server WIN-VQLBCDH0LK1 is working properly:
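The same check can be done from the command line. The sketch below filters the neutron agent-list table for Hyper-V agents and prints their host and liveness. It assumes the Icehouse column layout (id, agent_type, host, alive, admin_state_up) and the agent type string "HyperV agent"; the function name is ours.

```bash
#!/usr/bin/env bash
# Sketch: from "neutron agent-list" table output, print host and liveness
# of every Hyper-V agent.
# Assumed columns: | id | agent_type | host | alive | admin_state_up |
hyperv_agents() {
    awk -F'|' '$3 ~ /HyperV agent/ { gsub(/ /, "", $4); gsub(/ /, "", $5); print $4, $5 }'
}

# Usage on the controller:
# neutron agent-list | hyperv_agents
```

In the client's table output a live agent is marked ":-)", a dead one "xxx".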

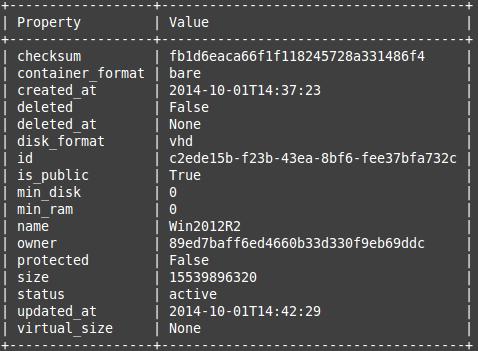

Registering an image for the Hyper-V server:

We chose Windows Server 2012 R2 in the standard VHDX format.

[root@ms-ctl1-f ~]# glance image-create --name "Win2012R2" --disk-format=vhd --container-format=bare --is-public True --progress < windows_server_2012_r2_standard_eval_hyperv_20140530.vhdx
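In a mixed environment it helps to tell the scheduler which hypervisor an image belongs to, so that a VHD image does not end up on a KVM node. A sketch, assuming ImagePropertiesFilter is among the enabled scheduler filters (it is part of Nova's default filter list) and the glance v1 client used above; the function name and the GLANCE dry-run override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: set the hypervisor_type image property so that Nova's
# ImagePropertiesFilter only places the image on matching compute nodes.
# GLANCE can be overridden (e.g. GLANCE=echo) for a dry run.
tag_image_hypervisor() {
    local image="$1" hv_type="$2"
    local glance="${GLANCE:-glance}"
    "$glance" image-update --property "hypervisor_type=$hv_type" "$image"
}

# Usage:
# tag_image_hypervisor Win2012R2 hyperv
```

For KVM images the corresponding value would typically be kvm (or qemu, depending on what the libvirt driver reports for the host).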

Launching an instance:

This is possible either from the OpenStack dashboard or from the command-line interface:

[root@ms-ctl1-f ~]# nova boot --image "Win2012R2" --flavor m1.medium --availability-zone nova:WIN-VQLBCDH0LK1 --nic net-id=a6f04f57-be72-4f2b-8e14-b7b6e57d7ce4 Win2012R2
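To confirm which hypervisor actually received the instance, an admin can read the host attribute from nova show. A small filter, assuming the standard table output of the nova client (the OS-EXT-SRV-ATTR:host field is shown to admin users only); the function name is ours.

```bash
#!/usr/bin/env bash
# Sketch: extract the compute host from "nova show" table output.
instance_host() {
    awk -F'|' '{ key = $2; gsub(/ /, "", key) }
               key == "OS-EXT-SRV-ATTR:host" { val = $3; gsub(/ /, "", val); print val }'
}

# Usage on the controller:
# nova show Win2012R2 | instance_host
```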

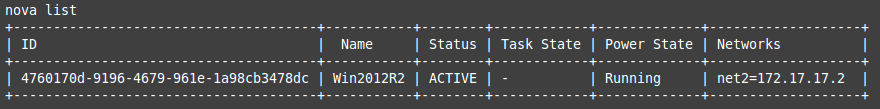

Running instance:

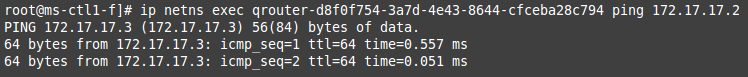

Ping from router to the instance:
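On the network node each Neutron router lives in its own network namespace, so a ping "from the router" runs inside that namespace. A sketch assuming the default qrouter-<router UUID> naming used by the Neutron L3 agent; the router UUID and instance IP are placeholders, and the function name and IP_CMD dry-run override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: ping an instance from inside the router's network namespace.
# IP_CMD can be overridden (e.g. IP_CMD=echo) for a dry run.
router_ping() {
    local router_id="$1" target="$2"
    local ip_cmd="${IP_CMD:-ip}"
    # the L3 agent names each router namespace "qrouter-<router UUID>"
    "$ip_cmd" netns exec "qrouter-$router_id" ping -c 4 "$target"
}

# Usage (IDs from "neutron router-list" and "nova list"):
# router_ping <router-uuid> <instance-ip>
```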

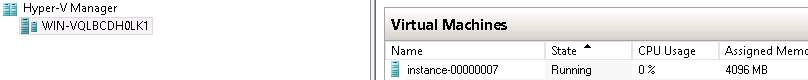

The new server appears in Hyper-V Manager as a running instance with a generic name.

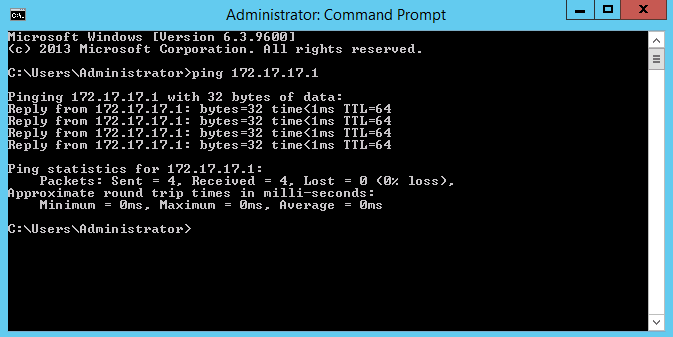

After connecting to the instance, we can ping back to the router:

The aim of our test was to find out whether OpenStack can be deployed in a multi-hypervisor configuration, so we re-enabled the nova-compute service on the controller:

[root@ms-ctl1-f ~]# nova-manage service enable --host=ms-ctl1-f.intra.cloudlab.cz --service=nova-compute
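Once both compute services are enabled, Nova should report two hypervisors. A minimal check, assuming the nova client's hypervisor-list and hypervisor-stats commands and admin credentials; the function name and NOVA dry-run override are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list the hypervisors Nova knows about (the KVM controller and the
# Hyper-V node should both appear) and their aggregated resources.
# NOVA can be overridden (e.g. NOVA=echo) for a dry run.
show_hypervisors() {
    local nova="${NOVA:-nova}"
    "$nova" hypervisor-list
    "$nova" hypervisor-stats
}

# Usage on the controller:
# show_hypervisors
```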

Registering the CentOS 6.4 image:

[root@ms-ctl1-f ~]# glance image-create --name "CentOS6.4" --disk-format=qcow2 --container-format=bare --is-public True --progress < CentOS6.4
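The --disk-format passed to glance should match the actual format of the file. A small sketch that reads the format reported by qemu-img info, assuming its usual "file format: ..." line; the function name is ours.

```bash
#!/usr/bin/env bash
# Sketch: print the on-disk format reported by "qemu-img info" so it can be
# compared with the --disk-format value given to glance.
image_format() {
    sed -n 's/^file format: //p'
}

# Usage before uploading:
# qemu-img info CentOS6.4 | image_format
```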

Creating a new network and subnet:

[root@ms-ctl1-f ~]# neutron net-create net1 --provider:network_type vlan --provider:physical_network physnet1 --provider:segmentation_id 1001

[root@ms-ctl1-f ~]# neutron subnet-create net1 172.17.16.0/24 --name sub2

Check:
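One way to check the new network is to read its provider attributes from neutron net-show. A sketch assuming the client's standard two-column table output; the function name is ours.

```bash
#!/usr/bin/env bash
# Sketch: print the provider:* attributes (network type, physical network,
# segmentation/VLAN ID) from "neutron net-show" table output.
provider_attrs() {
    awk -F'|' '{ key = $2; gsub(/ /, "", key) }
               key ~ /^provider:/ { val = $3; gsub(/ /, "", val); print key "=" val }'
}

# Usage:
# neutron net-show net1 | provider_attrs
```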

Instance launch:

[root@ms-ctl1-f ~]# nova boot --image "CentOS6.4" --flavor m1.small --availability-zone nova:ms-ctl1-f.intra.cloudlab.cz --nic net-id=a6f04f57-be72-4f2b-8e14-b7b6e57d7ce4 centos

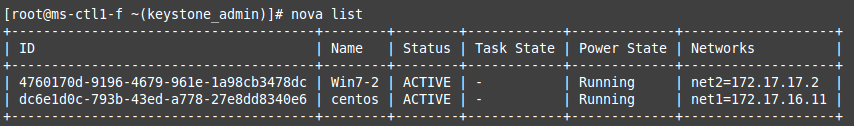

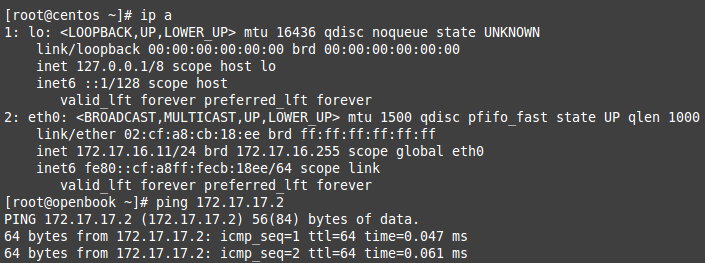

Finally, a ping from the instance running on KVM to the instance running on the Hyper-V server:

In conclusion, and somewhat to our surprise, it really is possible to run a hybrid KVM and Hyper-V environment under a single platform. Because shared storage is simple to use under Hyper-V, it is easy to perform live migration or to run MS SQL and other Microsoft applications directly on their native virtualization. However, with the Juno release approaching, it will be very difficult to integrate new technologies such as DVR (Distributed Virtual Router) into this setup.

Another interesting fact is that this entire environment was built without any license fee!

Vlastimil Mikes & Jakub Pavlík

tcp cloud engineers