Block Live Migration in OpenStack environment

As a company dedicated to deploying a variety of cloud solutions built on OpenStack, we deal daily with issues of high availability, disaster recovery and security. High availability is one of the key concerns in building any cloud infrastructure. In this paper we take a closer look at live migration and how it can be achieved in an OpenStack environment built on KVM.

Before I turn to live migration and how we tested it, I would like to quote our colleague Raj Geda from Symantec. When asked "How does Symantec handle live migration?", he replied: "This is a cloud! There is no live migration." I admit I was amused, and at first it seemed like nonsense; I cannot imagine giving that answer to a customer running VMware infrastructure. For context, though, Symantec has deployed OpenStack across three data centers, with thousands of hypervisors and tens of thousands of virtual instances. Symantec provides high availability at the application level rather than at the operating system level, which is the future we would like to be part of.

It is always worth asking whether you really need features such as DRS, vMotion, shared storage, etc. How often do you actually perform a live migration? Isn't live migration used mainly for hypervisor maintenance? Do we really want virtual instances to be moved without our knowledge? Even when it is reliable, any live migration poses a risk to production applications. Finally, there is the question of money: do we really want to pay such a high price in license fees for this feature?

Live migration is used when a running instance needs to be moved from one compute node to another, for example to a less loaded or higher-performance node. During cloud maintenance, it lets instances stay available while their host is serviced, achieving zero downtime.

OpenStack VM live migration can be divided into three categories:

Shared

This type of migration requires shared storage that is accessible to all compute nodes in the cluster (e.g. NFS).

Non-shared (block)

No shared storage is required among the nova-compute hypervisors. The virtual disk is transferred over the network from the source node to the destination. As a result, the disk transfer takes longer, and the VM's performance is reduced while it is in progress.

Volume

Works like shared migration, because the volumes are visible to all nodes in the cluster.
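To get a feel for why block migration takes longer, the disk copy time can be estimated from the disk size and link speed. The sketch below is a back-of-the-envelope calculation under our own assumptions: the whole disk is copied, and only a fixed fraction of the link bandwidth is usable. The function name and the 70% efficiency default are hypothetical, and the amount of data actually copied depends on the image format and migration flags in use.

```python
def estimate_copy_seconds(disk_gib: float, link_gbit_s: float,
                          efficiency: float = 0.7) -> float:
    """Estimate seconds to copy a virtual disk during block migration.

    Hypothetical helper: assumes the whole disk of `disk_gib` GiB is sent
    over a link of `link_gbit_s` Gbit/s, of which only `efficiency`
    (0 < efficiency <= 1) is usable for the transfer.
    """
    if disk_gib < 0 or link_gbit_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid disk size, link speed or efficiency")
    bits_to_send = disk_gib * 1024**3 * 8          # GiB -> bits
    usable_bits_per_s = link_gbit_s * 1e9 * efficiency
    return bits_to_send / usable_bits_per_s


if __name__ == "__main__":
    # Illustrative numbers only: a 20 GiB disk over 1 and 10 Gbit/s links.
    for link in (1, 10):
        for eff in (1.0, 0.7):
            seconds = estimate_copy_seconds(20, link, eff)
            print(f"20 GiB, {link} Gbit/s, {eff:.0%} usable: ~{seconds:.0f} s")
```

With `efficiency=1.0` (full line rate), the function gives about 172 s for a 20 GiB disk on 1 Gbit/s and about 17 s on 10 Gbit/s, which suggests that a fast network between compute nodes makes block migration considerably more practical.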

In our test, we focus on non-shared block migration.

Architecture

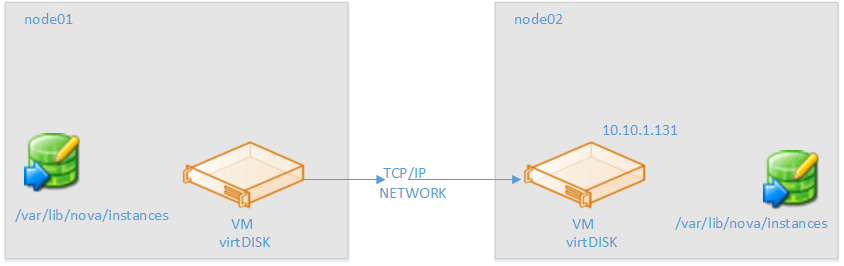

In this example we have a two-node nova-compute cluster (node01 and node02).

The aim is to migrate a running instance from the nova-compute node named node01 to node02 and verify that it remains available.

Typically, in an OpenStack environment, each compute node keeps its instances locally in a dedicated directory (e.g. /var/lib/nova/instances).
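To see which instances keep local disks on a given compute node, that directory can be listed. A minimal sketch, assuming one subdirectory per instance named after the instance UUID (other entries, such as an image cache, are skipped because they do not parse as UUIDs); the helper names are hypothetical, so check the layout on your own nodes first:

```python
import os
import uuid


def looks_like_instance_dir(name: str) -> bool:
    """Return True if `name` parses as a UUID (assumed instance dir name)."""
    try:
        uuid.UUID(name)
    except ValueError:
        return False
    return True


def local_instance_dirs(base: str = "/var/lib/nova/instances") -> list[str]:
    """List UUID-named subdirectories of `base`, sorted by name."""
    return sorted(
        entry for entry in os.listdir(base)
        if os.path.isdir(os.path.join(base, entry))
        and looks_like_instance_dir(entry)
    )


if __name__ == "__main__":
    base = "/var/lib/nova/instances"
    # Only list the directory on a node where it actually exists.
    if os.path.isdir(base):
        for instance_id in local_instance_dirs(base):
            print(instance_id)
```

Under that assumption, running it before and after a block migration, we would expect the instance's UUID to appear on the source node first and on the destination node afterwards.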

Both compute nodes are registered as hypervisors:

nova hypervisor-list
+----+---------------------+
| ID | Hypervisor hostname |
+----+---------------------+
| 3  | node01              |
| 6  | node02              |
+----+---------------------+

To enable block migration in OpenStack, you must edit libvirtd.conf and nova.conf on each nova-compute node.

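/etc/nova/nova.conf

live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE

/etc/libvirt/libvirtd.conf

listen_tls = 0
listen_tcp = 1
auth_tcp = "none"

Adding the live_migration_flag line to /etc/nova/nova.conf enables "true" live migration: the VIR_MIGRATE_LIVE flag tells libvirt not to pause the instance while its memory is transferred, so it stays available throughout the migration apart from a brief pause at the final switchover. Note that libvirtd only honors listen_tcp when it is started with the --listen option; on Ubuntu this is done by setting libvirtd_opts="-d -l" in /etc/default/libvirt-bin.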
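A typo in these files (a missing flag, or typographic quotes around none) is easy to miss. The sketch below is a hypothetical checker, not part of OpenStack or libvirt: it reads simple key = value lines, ignores comments and [section] headers, and compares them with the values listed above. Because it ignores sections, it cannot tell which section of nova.conf the option sits in.

```python
# Expected values taken from the configuration above; the checker
# itself is a hypothetical helper, not an OpenStack or libvirt tool.
LIBVIRTD_EXPECTED = {"listen_tls": "0", "listen_tcp": "1", "auth_tcp": "none"}
NOVA_FLAGS_EXPECTED = {"VIR_MIGRATE_UNDEFINE_SOURCE",
                       "VIR_MIGRATE_PEER2PEER", "VIR_MIGRATE_LIVE"}


def parse_settings(text: str) -> dict[str, str]:
    """Parse `key = value` lines; skip comments, blanks and [sections].

    Straight single or double quotes around values are stripped, so
    typographic quotes such as “none” are kept and will be reported.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("[") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        settings[key] = value.strip("'\"")
    return settings


def check_libvirtd(text: str) -> list[str]:
    """Return human-readable problems found in libvirtd.conf text."""
    found = parse_settings(text)
    return [f"{key} should be {want!r}, found {found.get(key)!r}"
            for key, want in LIBVIRTD_EXPECTED.items()
            if found.get(key) != want]


def check_nova(text: str) -> list[str]:
    """Return the flags missing from live_migration_flag in nova.conf."""
    raw = parse_settings(text).get("live_migration_flag", "")
    present = {flag.strip() for flag in raw.split(",") if flag.strip()}
    return [f"live_migration_flag is missing {flag}"
            for flag in sorted(NOVA_FLAGS_EXPECTED - present)]


if __name__ == "__main__":
    for path, check in (("/etc/libvirt/libvirtd.conf", check_libvirtd),
                        ("/etc/nova/nova.conf", check_nova)):
        try:
            with open(path) as fh:
                problems = check(fh.read())
        except OSError as exc:
            print(f"{path}: cannot read ({exc})")
            continue
        print(f"{path}: {problems or 'OK'}")
```

Run on each compute node before restarting the services; an empty problem list means the values match the ones used in this test.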

After making these changes, restart libvirt and nova-compute:

service libvirt-bin restart
service nova-compute restart

Testing

From the OpenStack dashboard, we launch a new instance called migratevm (Ubuntu 14.04.1):

root@node01:~# virsh list
 Id    Name                           State
----------------------------------------------------
 5     instance-00000113              running
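The libvirt domain name in this output (instance-00000113) is the same name nova reports as OS-EXT-SRV-ATTR:instance_name, which makes it easy to confirm on each node where the guest is actually running. A minimal parsing sketch, assuming the three-column Id / Name / State layout shown here (the function name is hypothetical):

```python
def parse_virsh_list(output: str) -> dict[str, str]:
    """Map libvirt domain names to their state from `virsh list` output.

    Assumes the Id / Name / State layout shown above; the state may
    contain a space (e.g. "shut off"), so each row is split at most twice.
    """
    domains = {}
    for line in output.splitlines():
        fields = line.split(None, 2)
        # Skip blank lines, the header row and the dashed separator line.
        if len(fields) < 3 or fields[0] == "Id":
            continue
        _domain_id, name, state = fields
        domains[name] = state.strip()
    return domains


if __name__ == "__main__":
    # Output copied from the test above (node01, before migration).
    sample = """\
 Id    Name                           State
----------------------------------------------------
 5     instance-00000113              running
"""
    print(parse_virsh_list(sample))  # {'instance-00000113': 'running'}
```

After the migration, the same parse of `virsh list` on node02 should contain instance-00000113, while node01 no longer lists it.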

root@node01:~# nova list
+--------------------------------------+--------------+---------+------------+-------------+------------------------+
| ID                                   | Name         | Status  | Task State | Power State | Networks               |
+--------------------------------------+--------------+---------+------------+-------------+------------------------+
| cb28ddea-0a06-45ce-a8bf-da3d20c2da81 | migratevm    | ACTIVE  | -          | Running     | sharednet1=10.10.1.131 |
+--------------------------------------+--------------+---------+------------+-------------+------------------------+

Next, we find out which node the instance is running on (the OS-EXT-SRV-ATTR:hypervisor_hostname field):

root@node01:~# nova show cb28ddea-0a06-45ce-a8bf-da3d20c2da81 | grep hypervisor
| OS-EXT-SRV-ATTR:hypervisor_hostname  | node01                                                   |
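When scripting this check, the value can be read from the nova show table instead of grepping. A sketch assuming the two-column | Property | Value | layout printed by the nova client; show_property is a hypothetical helper name:

```python
from typing import Optional


def show_property(output: str, name: str) -> Optional[str]:
    """Return the value of property `name` from `nova show` table output.

    Rows look like "| OS-EXT-SRV-ATTR:host | node01 |"; border lines
    ("+----+") do not split into two cells and are ignored.
    Returns None if the property is absent.
    """
    for line in output.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) == 2 and cells[0] == name:
            return cells[1]
    return None


if __name__ == "__main__":
    # Line copied from the grep output above.
    sample = "| OS-EXT-SRV-ATTR:hypervisor_hostname | node01 |"
    print(show_property(sample, "OS-EXT-SRV-ATTR:hypervisor_hostname"))  # node01
```

Against a live cloud, the input would come from something like `subprocess.run(["nova", "show", instance_id], capture_output=True, text=True).stdout`.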

The instance is running on node01, so we can now live-migrate it to node02:

root@node01:~# nova live-migration --block-migrate cb28ddea-0a06-45ce-a8bf-da3d20c2da81 node02
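The same call can be issued from a script. A small sketch, assuming the nova client is installed and OpenStack credentials are already set in the environment; live_migrate_argv and live_migrate are hypothetical helper names, and only the nova live-migration command itself comes from this test. Passing the arguments as a list avoids any shell quoting issues.

```python
import subprocess


def live_migrate_argv(instance_id: str, target_host: str,
                      block_migrate: bool = True) -> list[str]:
    """Build the nova CLI arguments for a live migration."""
    argv = ["nova", "live-migration"]
    if block_migrate:
        # Copy local disks over the network (no shared storage needed).
        argv.append("--block-migrate")
    argv += [instance_id, target_host]
    return argv


def live_migrate(instance_id: str, target_host: str,
                 block_migrate: bool = True) -> None:
    """Send the migration request; raises CalledProcessError on failure."""
    subprocess.run(live_migrate_argv(instance_id, target_host, block_migrate),
                   check=True)
```

For example, `live_migrate("cb28ddea-0a06-45ce-a8bf-da3d20c2da81", "node02")` issues the same request as the command above. The command returns before the migration finishes, which is why nova show below still reports MIGRATING.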

While the migration is in progress, nova show with the instance ID reports the VM in the MIGRATING state:

root@node01:~# nova show cb28ddea-0a06-45ce-a8bf-da3d20c2da81
+--------------------------------------+----------------------------------------------------------+
| Property                             | Value                                                    |
+--------------------------------------+----------------------------------------------------------+
| OS-DCF:diskConfig                    | MANUAL                                                   |
| OS-EXT-AZ:availability_zone          | nova                                                     |
| OS-EXT-SRV-ATTR:host                 | node01                                                   |
| OS-EXT-SRV-ATTR:hypervisor_hostname  | node01                                                   |
| OS-EXT-SRV-ATTR:instance_name        | instance-00000113                                        |
| OS-EXT-STS:power_state               | 1                                                        |
| OS-EXT-STS:task_state                | migrating                                                |
| OS-EXT-STS:vm_state                  | active                                                   |
| OS-SRV-USG:launched_at               | 2014-10-27T09:32:40.000000                               |
| OS-SRV-USG:terminated_at             | -                                                        |
| accessIPv4                           |                                                          |
| accessIPv6                           |                                                          |
| config_drive                         |                                                          |
| created                              | 2014-10-27T09:32:37Z                                     |
| flavor                               | m1.small (2)                                             |
| hostId                               | 6d4bfd179e2f89ee459eee64d1750b20a66a0cfb378df8126cc63762 |
| id                                   | cb28ddea-0a06-45ce-a8bf-da3d20c2da81                     |
| image                                | Ubuntu14.04 (8b5999c2-5ebd-4f60-858f-64da95956d00)       |
| key_name                             | -                                                        |
| metadata                             | {}                                                       |
| name                                 | migratevm                                                |
| os-extended-volumes:volumes_attached | []                                                       |
| security_groups                      | default                                                  |
| sharednet1 network                   | 10.10.1.131                                              |
| status                               | MIGRATING                                                |
| tenant_id                            | 4cddb258aa9c46808efd270342e4df25                         |
| updated                              | 2014-10-27T12:00:58Z                                     |
| user_id                              | f9458a75087946a7b342ef9a25a12615                         |
+--------------------------------------+----------------------------------------------------------+
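Rather than re-running nova show by hand until the task state clears, the wait can be scripted. A sketch with hypothetical names: wait_for_migration polls a caller-supplied get_status function (for example one that reads the status row of nova show) until it stops returning MIGRATING; the 600 s timeout and 5 s interval are arbitrary assumptions.

```python
import time
from typing import Callable


def wait_for_migration(get_status: Callable[[], str],
                       timeout: float = 600.0,
                       interval: float = 5.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the instance status is no longer MIGRATING.

    `get_status` is a caller-supplied (hypothetical) function returning the
    instance's `status` field. Returns the first non-MIGRATING status;
    raises TimeoutError once about `timeout` seconds of waiting have passed.
    """
    waited = 0.0
    while True:
        status = get_status()
        if status != "MIGRATING":
            return status
        if waited >= timeout:
            raise TimeoutError(f"still MIGRATING after {waited:.0f} s")
        sleep(interval)
        waited += interval


if __name__ == "__main__":
    # Simulated status sequence; a real get_status would call `nova show`.
    statuses = iter(["MIGRATING", "MIGRATING", "ACTIVE"])
    print(wait_for_migration(lambda: next(statuses), interval=0.1))  # ACTIVE
```

An ACTIVE result on its own does not prove that the instance moved, so it is worth also checking the host, as the second nova show below does.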

During the migration, we keep a ping running against the instance. When the disk copy finishes and the instance switches over to the new node, the ping response times rise noticeably, but the connection is not interrupted:

root@node02:~# ping 10.10.1.131
PING 10.10.1.131 (10.10.1.131) 56(84) bytes of data.
64 bytes from 10.10.1.131: icmp_seq=311 ttl=64 time=0.322 ms
64 bytes from 10.10.1.131: icmp_seq=312 ttl=64 time=4208 ms
64 bytes from 10.10.1.131: icmp_seq=313 ttl=64 time=3209 ms
64 bytes from 10.10.1.131: icmp_seq=314 ttl=64 time=2209 ms
64 bytes from 10.10.1.131: icmp_seq=315 ttl=64 time=1208 ms
64 bytes from 10.10.1.131: icmp_seq=316 ttl=64 time=209 ms
64 bytes from 10.10.1.131: icmp_seq=317 ttl=64 time=0.333 ms
64 bytes from 10.10.1.131: icmp_seq=318 ttl=64 time=0.319 ms

--- 10.10.1.131 ping statistics ---
330 packets transmitted, 329 received, 0% packet loss, time 328989ms
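The reply times follow a stair-step pattern (4208, 3209, 2209, 1208 and 209 ms, each about one second less than the previous one, matching ping's one-second interval). That is consistent with the replies being held back during a pause of roughly four seconds while the instance switched over to node02, and then delivered together once it resumed. The sketch below extracts those numbers from ping output; summarize_ping and its 100 ms "slow" threshold are our own illustrative choices, not part of ping.

```python
import re

# Reply lines look like
# "64 bytes from 10.10.1.131: icmp_seq=312 ttl=64 time=4208 ms".
REPLY_RE = re.compile(r"icmp_seq=(\d+) ttl=\d+ time=([\d.]+) ms")
# Summary line looks like "330 packets transmitted, 329 received, ...".
STATS_RE = re.compile(r"(\d+) packets transmitted, (\d+) received")

# Reply lines and summary copied from the ping run above.
ARTICLE_PING = """\
64 bytes from 10.10.1.131: icmp_seq=311 ttl=64 time=0.322 ms
64 bytes from 10.10.1.131: icmp_seq=312 ttl=64 time=4208 ms
64 bytes from 10.10.1.131: icmp_seq=313 ttl=64 time=3209 ms
64 bytes from 10.10.1.131: icmp_seq=314 ttl=64 time=2209 ms
64 bytes from 10.10.1.131: icmp_seq=315 ttl=64 time=1208 ms
64 bytes from 10.10.1.131: icmp_seq=316 ttl=64 time=209 ms
64 bytes from 10.10.1.131: icmp_seq=317 ttl=64 time=0.333 ms
64 bytes from 10.10.1.131: icmp_seq=318 ttl=64 time=0.319 ms
330 packets transmitted, 329 received, 0% packet loss, time 328989ms
"""


def summarize_ping(output: str, slow_ms: float = 100.0) -> dict:
    """Return the worst RTT, the sequence numbers of slow replies and,
    if the summary line is present, the number of unanswered packets."""
    rtts = {int(m.group(1)): float(m.group(2))
            for m in REPLY_RE.finditer(output)}
    summary = {
        "max_rtt_ms": max(rtts.values(), default=0.0),
        "slow_seqs": sorted(seq for seq, rtt in rtts.items() if rtt >= slow_ms),
    }
    stats = STATS_RE.search(output)
    if stats:
        sent, received = (int(g) for g in stats.groups())
        summary["lost"] = sent - received
    return summary


if __name__ == "__main__":
    print(summarize_ping(ARTICLE_PING))
    # {'max_rtt_ms': 4208.0, 'slow_seqs': [312, 313, 314, 315, 316], 'lost': 1}
```

In this log the worst reply took about 4.2 s, five consecutive replies were slow, and 329 of 330 packets were answered.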

The nova show migratevm command now shows the instance running on the second node, node02:

root@node01:~# nova show migratevm
+--------------------------------------+----------------------------------------------------------+
| Property                             | Value                                                    |
+--------------------------------------+----------------------------------------------------------+
| OS-DCF:diskConfig                    | MANUAL                                                   |
| OS-EXT-AZ:availability_zone          | nova                                                     |
| OS-EXT-SRV-ATTR:host                 | node02                                                   |
| OS-EXT-SRV-ATTR:hypervisor_hostname  | node02                                                   |
| OS-EXT-SRV-ATTR:instance_name        | instance-00000113                                        |
| OS-EXT-STS:power_state               | 1                                                        |
| OS-EXT-STS:task_state                | -                                                        |
| OS-EXT-STS:vm_state                  | active                                                   |
| OS-SRV-USG:launched_at               | 2014-10-27T09:32:40.000000                               |
| OS-SRV-USG:terminated_at             | -                                                        |
| accessIPv4                           |                                                          |
| accessIPv6                           |                                                          |
| config_drive                         |                                                          |
| created                              | 2014-10-27T09:32:37Z                                     |
| flavor                               | m1.small (2)                                             |
| hostId                               | 5931121941d579fa05ffb24fff1b09b3ac5114bdf6134c76c736e574 |
| id                                   | cb28ddea-0a06-45ce-a8bf-da3d20c2da81                     |
| image                                | Ubuntu14.04 (8b5999c2-5ebd-4f60-858f-64da95956d00)       |
| key_name                             | -                                                        |
| metadata                             | {}                                                       |
| name                                 | migratevm                                                |
| os-extended-volumes:volumes_attached | []                                                       |
| progress                             | 0                                                        |
| security_groups                      | default                                                  |
| sharednet1 network                   | 10.10.1.131                                              |
| status                               | ACTIVE                                                   |
| tenant_id                            | 4cddb258aa9c46808efd270342e4df25                         |
| updated                              | 2014-10-27T09:50:19Z                                     |
| user_id                              | f9458a75087946a7b342ef9a25a12615                         |
+--------------------------------------+----------------------------------------------------------+
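The checks made by eye here (same instance ID, hypervisor changed from node01 to node02, status back to ACTIVE, task state cleared) can be expressed as a small function. A sketch assuming the nova show table format above; parse_show and migrated_to are hypothetical helpers, and BEFORE and AFTER are trimmed copies of the two outputs in this article.

```python
# Trimmed copies of the two `nova show` outputs from this test.
BEFORE = """\
| OS-EXT-SRV-ATTR:hypervisor_hostname | node01 |
| OS-EXT-STS:task_state | migrating |
| id | cb28ddea-0a06-45ce-a8bf-da3d20c2da81 |
| status | MIGRATING |
"""
AFTER = """\
| OS-EXT-SRV-ATTR:hypervisor_hostname | node02 |
| OS-EXT-STS:task_state | - |
| id | cb28ddea-0a06-45ce-a8bf-da3d20c2da81 |
| status | ACTIVE |
"""


def parse_show(output: str) -> dict[str, str]:
    """Turn `nova show` rows such as "| status | ACTIVE |" into a dict."""
    props = {}
    for line in output.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Border and blank lines yield one cell; the header row is skipped.
        if len(cells) == 2 and cells[0] not in ("", "Property"):
            props[cells[0]] = cells[1]
    return props


def migrated_to(before: str, after: str, target: str) -> bool:
    """True if the same instance moved to `target` and is ACTIVE there."""
    old, new = parse_show(before), parse_show(after)
    host = "OS-EXT-SRV-ATTR:hypervisor_hostname"
    return (new.get("id") is not None
            and old.get("id") == new.get("id")
            and old.get(host) != target
            and new.get(host) == target
            and new.get("status") == "ACTIVE"
            and new.get("OS-EXT-STS:task_state") == "-")


if __name__ == "__main__":
    print(migrated_to(BEFORE, AFTER, "node02"))  # True
```

Feeding it the full outputs captured before and after a migration gives a simple pass/fail result for repeated test runs.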

Conclusion

We have shown that even without shared storage, running virtual instances can be live-migrated without interruption; the ping test above showed only a few seconds of increased latency during the switchover. KVM has made great progress over the last two years, and our tests confirmed that it is important to always use the most recent versions of the kernel, KVM and libvirt: when we ran the same test on a CentOS 6.4 platform six months ago, block live migration interrupted the running instance.

This method can be used for less critical applications where a "cost-effective" solution (no shared storage, no licenses) is needed while still keeping the ability to migrate virtual servers for maintenance.

For critical applications, it is better to boot instances from shared storage (boot from volume) over iSCSI or Fibre Channel. This allows standard live migration, similar to a classic VMware infrastructure.

Jakub Pavlik & Vlastimil Mikes

tcp cloud engineers