Cloud Prizefight: OpenStack vs. VMware

There have been many discussions in the cloud landscape comparing VMware and OpenStack. In fact, it's one of the most popular topics among those thinking about adopting OpenStack. I've given a couple of presentations on this topic to the SF Bay OpenStack Meetup, and many peers have asked me to write about it. To make it interesting, I've structured this as a head-to-head bout between two cloud software contenders competing for usage in your data center. Some aspects I will consider are open vs. closed systems, enterprise legacy applications vs. cloud-aware applications, free vs. licensed software, and well-tested features vs. controlling your own roadmap.

The contenders will be judged in the following categories: design, features, use cases, and value. The categories will be scored on a 10-point scale and then tallied to determine the winner.

Round 1: Design

VMware's suite of applications was built from the ground up, starting with the hypervisor. The ESX(i) hypervisor is free and provides an excellent foundation for VMware orchestration products such as vSphere and vCloud Director. The software is thoroughly tested and has a monolithic architecture. Overall, the product is well documented and has a proven track record, with high-profile customers using it at multi-data-center scale. That said, the system is closed and the roadmap depends entirely on VMware's own objectives, leaving consumers no control over it.

OpenStack is open source, and no single company controls its destiny. The project is relatively new (2 years young) but has huge market momentum and the backing of many large companies (see: companies supporting OpenStack). With so many companies devoting resources to OpenStack, it does not depend on any single vendor. However, the deployment and architecture have a steeper learning curve than VMware's, and the documentation is not always current.

Scoring - Design

| Category | Design | Features | Use Cases | Value |
|---|---|---|---|---|
| OpenStack | 7 | | | |
| VMware | 8 | | | |

Round 2: Features

VMware vMotion

vMotion is the building block for three vSphere features: DRS, DPM, and host maintenance. Live migration moves a running VM from one host to another with zero downtime, using shared storage. When a VM is moved from one host to another, its RAM state and data must be migrated to the new host. Since the storage is shared, the data does not need to move at all; only the link to the data changes from one host to the other. This makes for a fast transition, since the data does not need to be copied over the network.

*As of vSphere 5.1, VMware supports live migration without shared storage.
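To see how RAM can be moved with almost no downtime, here is a simplified Python model of iterative pre-copy, the general scheme many hypervisors use for live migration: copy all memory while the VM keeps running, re-send the pages it dirtied in the meantime, and pause the VM only for a short final stop-and-copy. This is a sketch, not VMware's or KVM's implementation; the constant dirty rate, the function name `simulate_precopy`, and its parameters are assumptions for illustration.

```python
def simulate_precopy(ram_mb, dirty_mb_per_s, bandwidth_mb_per_s,
                     stop_copy_threshold_mb, max_rounds=30):
    """Simplified iterative pre-copy model (assumes a constant dirty rate).

    Returns (pre_copy_rounds, downtime_seconds, total_mb_sent).
    """
    total_sent = 0.0
    to_send = float(ram_mb)  # first round copies all guest RAM
    rounds = 0
    while to_send > stop_copy_threshold_mb and rounds < max_rounds:
        seconds = to_send / bandwidth_mb_per_s
        total_sent += to_send
        rounds += 1
        # Pages the guest dirtied while this round was in flight must be
        # resent; the dirty set can never exceed the VM's total RAM.
        to_send = min(float(ram_mb), dirty_mb_per_s * seconds)
    # Stop-and-copy: the guest is paused while the remainder is sent.
    downtime_s = to_send / bandwidth_mb_per_s
    total_sent += to_send
    return rounds, downtime_s, total_sent


rounds, downtime, sent = simulate_precopy(4096, 100, 1000, 50)
print(f"{rounds} rounds, {downtime * 1000:.0f} ms pause, {sent:.0f} MB sent")
```

With 4,096 MB of RAM, a 1,000 MB/s link, and a guest dirtying 100 MB/s, the model converges after two rounds and pauses the guest for about 41 ms. If the dirty rate approaches the link speed, it never converges and the final pause becomes a full copy of RAM.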

OpenStack Live Migration

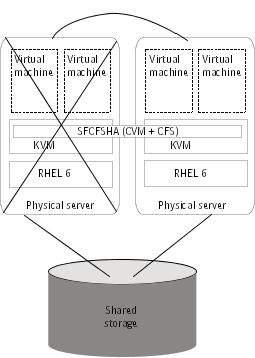

KVM live migration allows a guest operating system to move to another hypervisor. The main dependency here is shared storage.


KVM live migration allows a guest operating system to move to another hypervisor. You can migrate a guest back and forth between an AMD host and an Intel host. A 64-bit guest can only be migrated to a 64-bit host, but a 32-bit guest can be migrated to either. During the live migration process the guest should not be affected by the operation, and the client can continue to perform operations while the migration is running. The main dependency here is shared storage, which can be expensive.

Live migration requirements:

- Distributed filesystem for the VM storage, such as NFS or GlusterFS

- Libvirt must have the listen flag enabled

- Each compute node (hypervisor) must be located in the same network/subnet

- Authentication must be configured as `none` or via SSH with SSH keys

- The mount point used by the DFS must be the same at each location
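The requirements above can be turned into a quick pre-flight check. The sketch below assumes a hypothetical inventory of compute nodes (the `ComputeNode` fields and the `live_migration_problems` helper are illustrative, not part of OpenStack) and checks the subnet, libvirt listen, authentication, and mount-point requirements. It does not verify that the distributed filesystem itself is healthy.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class ComputeNode:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    address: str          # management IP address of the hypervisor
    libvirt_listen: bool  # is libvirtd accepting remote connections?
    auth_mode: str        # migration auth: expected "none" or "ssh"
    mount_point: str      # where the shared VM storage is mounted


def live_migration_problems(nodes, subnet):
    """Return a list of human-readable requirement violations."""
    network = ipaddress.ip_network(subnet)
    problems = []
    mounts = {node.mount_point for node in nodes}
    if len(mounts) > 1:
        problems.append(f"mount points differ: {sorted(mounts)}")
    for node in nodes:
        if ipaddress.ip_address(node.address) not in network:
            problems.append(f"{node.name}: {node.address} is outside {subnet}")
        if not node.libvirt_listen:
            problems.append(f"{node.name}: libvirt listen flag not enabled")
        if node.auth_mode not in ("none", "ssh"):
            problems.append(
                f"{node.name}: auth mode {node.auth_mode!r} is neither 'none' nor 'ssh'")
    return problems


nodes = [
    ComputeNode("compute-1", "10.0.0.11", True, "ssh", "/var/lib/nova/instances"),
    ComputeNode("compute-2", "10.0.1.12", False, "password", "/mnt/instances"),
]
for problem in live_migration_problems(nodes, "10.0.0.0/24"):
    print(problem)
```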

OpenStack Block Migration

In OpenStack, shared storage is not required for VM migration, since there is support for KVM block migration. In this scenario, both the RAM state and the disk data are moved from one host to another. The downside is that it takes longer and consumes CPU resources on both the source and target hosts. There are use cases where block migration is a better option than classic live migration, because the ability to migrate your VMs using only the network can be priceless. This is especially true if the main purpose of moving VMs is host maintenance. Some deployments do not have shared storage but still need to perform maintenance on compute nodes, such as kernel or security upgrades, and cannot accept VM downtime. In this case, block migration is the ideal solution.
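A back-of-the-envelope model shows why block migration takes longer: with shared storage only the RAM crosses the network, while block migration also copies the disk. The helper below is a hypothetical sketch that assumes decimal gigabytes and a fully available link, and it ignores re-sent dirty pages and protocol overhead, so it gives a lower bound only.

```python
def min_transfer_seconds(ram_gb, disk_gb, link_gbit_per_s, shared_storage):
    """Lower bound on migration transfer time, in seconds.

    Shared storage: only RAM crosses the network.
    Block migration: RAM plus the full disk image cross the network.
    Ignores re-sent dirty pages and protocol overhead (assumption).
    """
    gigabytes = ram_gb if shared_storage else ram_gb + disk_gb
    return gigabytes * 8 / link_gbit_per_s  # 8 bits per byte


for shared in (True, False):
    label = "shared storage" if shared else "block migration"
    print(f"{label}: >= {min_transfer_seconds(8, 80, 10, shared):.1f} s")
```

For a VM with 8 GB of RAM and an 80 GB disk on a 10 Gbit/s link, the model gives at least 6.4 seconds with shared storage versus at least 70.4 seconds with block migration.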


Use case:

A user doesn’t have a distributed filesystem, and doesn't want one for understandable reasons—perhaps the costs of enterprise storage and network latency—but wants to be able to perform maintenance operations on hypervisors without interrupting VMs.

VMware DRS and DPM

DRS leverages vMotion by dynamically monitoring the resource usage of VMs and hosts during runtime and moving the VMs to efficiently load balance across hosts.

Use cases:

- Provision time: Initial placement of VMs based on any automation that you have set up

- Runtime: DRS distributes virtual machine workloads across the ESX(i) hosts. DRS continuously monitors the active workload and the available resources and performs VM migrations to maximize workload performance.
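The runtime behavior can be illustrated with a toy greedy rebalancer: repeatedly take the busiest and idlest hosts and move the single VM that most narrows the load gap between them. This is a hypothetical sketch of the idea, not DRS's actual algorithm, which weighs many more factors; the `rebalance` function and the abstract per-VM load numbers are assumptions.

```python
def rebalance(hosts, max_moves=10):
    """Greedy rebalancing of VM load across hosts (toy model, not DRS).

    hosts maps host name -> {vm name: load}; it is modified in place.
    Returns the list of (vm, from_host, to_host) moves performed.
    """
    moves = []
    for _ in range(max_moves):
        loads = {host: sum(vms.values()) for host, vms in hosts.items()}
        busiest = max(loads, key=loads.get)
        idlest = min(loads, key=loads.get)
        gap = loads[busiest] - loads[idlest]
        # Moving a VM of load L changes the pair's gap from gap to |gap - 2L|.
        best_vm, best_gap = None, gap
        for vm, load in hosts[busiest].items():
            new_gap = abs(gap - 2 * load)
            if new_gap < best_gap:
                best_vm, best_gap = vm, new_gap
        if best_vm is None:
            break  # no single move narrows the gap any further
        hosts[idlest][best_vm] = hosts[busiest].pop(best_vm)
        moves.append((best_vm, busiest, idlest))
    return moves


cluster = {"esx1": {"a": 50, "b": 30, "c": 20}, "esx2": {"d": 10}}
print(rebalance(cluster))
```

Here esx1 starts at a load of 100 and esx2 at 10. One move (VM `a` to esx2) brings them to 50 and 60, after which no single move helps and the loop stops.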

DPM leverages vMotion to consolidate VMs onto fewer hosts during periods of low load and then powers off the idle hosts to reduce power consumption. When the load grows, DPM powers hosts back on and VMs are moved back onto them.
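Consolidating load to save power is essentially a bin-packing problem. The sketch below uses first-fit decreasing, a standard bin-packing heuristic, to pack VMs onto as few hosts as possible and report which hosts could be powered off. It is an illustration under assumptions (a single abstract load dimension and a hypothetical per-host capacity), not how DPM actually makes its decisions.

```python
def consolidate(vm_loads, host_names, host_capacity):
    """First-fit decreasing: pack VMs onto as few hosts as possible.

    Returns (placement, idle_hosts); idle hosts are power-off candidates.
    """
    placement = {host: [] for host in host_names}
    used = {host: 0 for host in host_names}
    # Place the largest VMs first; each goes on the first host it fits.
    for vm, load in sorted(vm_loads.items(), key=lambda kv: kv[1], reverse=True):
        for host in host_names:
            if used[host] + load <= host_capacity:
                placement[host].append(vm)
                used[host] += load
                break
        else:
            raise ValueError(f"{vm} does not fit on any host")
    idle_hosts = [host for host in host_names if not placement[host]]
    return placement, idle_hosts


placement, idle = consolidate({"a": 40, "b": 30, "c": 20, "d": 10}, ["h1", "h2", "h3"], 80)
print(placement, "power off:", idle)
```

A real power manager would also keep spare headroom so that a load spike does not immediately force hosts back on; in this model that simply means passing a lower capacity.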

OpenStack Scheduler

OpenStack includes schedulers for compute and volumes. The scheduler selects an appropriate host for your VM based on a list of attributes and filters set by the cloud admin. It is quite flexible and supports a wealth of filters, and consumers can also write custom filters using JSON. While the scheduler is flexible and highly customizable, it is not quite a replacement for DRS, for the following reasons:

- The data the scheduler uses to choose a host is static data derived from the nova database. For example, if host A already has four VMs, the scheduler may pick a different host for the next VM.

- The scheduler only influences the placement of VMs at provision time; it will not move VMs while they are running. Dynamic data can be supplied by an external monitoring solution such as Nagios working with the scheduler; however, the scheduler will still only affect the initial placement of VMs.
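The filter-then-choose flow described above can be sketched without Nova itself. The example below evaluates a prefix-list JSON query, modeled loosely on the style of Nova's JsonFilter (for example `[">=", "$free_ram_mb", 1024]`), against static per-host records, then picks the host running the fewest VMs. The host fields, the `schedule` helper, and the weighing rule are hypothetical; note that nothing here moves a VM after it has been placed.

```python
import json
import operator

# Comparison operators allowed in a query (subset, for illustration).
COMPARISONS = {
    "=": operator.eq,
    "<": operator.lt,
    ">": operator.gt,
    "<=": operator.le,
    ">=": operator.ge,
}


def evaluate(query, host):
    """Evaluate a prefix-list query such as [">=", "$free_ram_mb", 1024]."""
    op, *args = query
    if op == "and":
        return all(evaluate(sub, host) for sub in args)
    if op == "or":
        return any(evaluate(sub, host) for sub in args)
    if op == "not":
        return not evaluate(args[0], host)
    # "$name" refers to a field of the host's (static) record.
    left, right = (host[a[1:]] if isinstance(a, str) and a.startswith("$") else a
                   for a in args)
    return COMPARISONS[op](left, right)


def schedule(hosts, query_json):
    """Filter hosts with the JSON query, then pick the one with fewest VMs."""
    query = json.loads(query_json)
    candidates = [h for h in hosts if evaluate(query, h)]
    if not candidates:
        return None
    return min(candidates, key=lambda h: h["num_instances"])["name"]


hosts = [
    {"name": "A", "free_ram_mb": 8192, "free_disk_mb": 100_000, "num_instances": 4},
    {"name": "B", "free_ram_mb": 4096, "free_disk_mb": 50_000, "num_instances": 1},
    {"name": "C", "free_ram_mb": 512, "free_disk_mb": 200_000, "num_instances": 0},
]
query = '["and", [">=", "$free_ram_mb", 1024], [">=", "$free_disk_mb", 20000]]'
print(schedule(hosts, query))
```

Host C is filtered out for lack of RAM, and B wins over A because A already runs four VMs, mirroring the static-data example in the list above.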

VMware HA

VM-level high availability (HA) in vSphere restarts a VM on a different host when the VM or its ESX(i) host fails. It should not be confused with fault tolerance (FT), as HA is not fault tolerant. HA simply means that when something fails, it can be restored in a reasonable amount of time via self-healing. HA protects virtual machines from hardware failure: if a failure does occur, HA reboots or powers on the VM on a different ESX(i) host, so it is basically a cold power-on after a crash. Under HA, services are still susceptible to downtime.
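The restart behavior can be sketched as a placement plan computed when a host fails: each VM from the failed host is cold-started on a surviving host with enough spare capacity, largest VMs first. This is a hypothetical illustration; the data layout and the `ha_restart_plan` helper are assumptions, and vSphere HA's real admission control and restart priorities are not modeled.

```python
def ha_restart_plan(hosts, failed_host):
    """Plan cold restarts for the VMs of a failed host (toy model).

    hosts maps host -> {"capacity": int, "vms": {vm: size}}.
    Returns {vm: new_host}, or {vm: None} when no survivor has room.
    """
    free = {
        host: info["capacity"] - sum(info["vms"].values())
        for host, info in hosts.items()
        if host != failed_host
    }
    plan = {}
    # Largest VMs first, each onto the survivor with the most free capacity.
    victims = sorted(hosts[failed_host]["vms"].items(), key=lambda kv: kv[1], reverse=True)
    for vm, size in victims:
        target = max(free, key=free.get) if free else None
        if target is not None and free[target] >= size:
            plan[vm] = target
            free[target] -= size
        else:
            plan[vm] = None  # VM stays down until capacity is freed
    return plan


cluster = {
    "esx1": {"capacity": 100, "vms": {"web": 30, "db": 50}},
    "esx2": {"capacity": 100, "vms": {"app": 40}},
    "esx3": {"capacity": 100, "vms": {"cache": 70}},
}
print(ha_restart_plan(cluster, "esx1"))
```

Every VM in the plan goes through a full boot on its new host, which is exactly why services protected only by HA still see downtime.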

OpenStack HA

Currently, there is no official support for VM-level HA in OpenStack—it was initially planned for the Folsom release but was later dropped/postponed. There is currently an incubation project called Evacuate that is adding support for VM-level HA to OpenStack.

VMware Fault Tolerance

VMware FT live-streams the state of a protected virtual machine, and all changes to it, to a secondary ESX(i) server. Fault tolerance means that when either the primary or the secondary VM's host dies, the VM keeps running as long as the other stays up. Contrary to marketing myths, this still doesn't help you if an application crashes or during patching: once an application crashes, it crashes on both sides, and if you stop a service to patch it, it also stops on both VMs. FT protects you against a single host failure with no interruption to the protected VM. True application-level clustering such as MSCS or WCS is required to protect against application-level failures. Given FT's other limitations, such as high resource usage (double the RAM, disk, and CPU) plus the bandwidth needed to stream the state, it is one of the less-used VMware features. It requires twice the memory, because memory cannot be deduplicated via TPS (transparent page sharing) across hosts. It also uses CPU lockstepping to synchronize every CPU instruction between the VMs, which is why only single-vCPU VMs can be protected with FT.

OpenStack FT

In OpenStack, there is no feature comparable to FT, and there are no plans to introduce one. Furthermore, instruction mirroring is not supported by KVM, the most common hypervisor for OpenStack.

Scoring - Features

| Category | Design | Features | Use Cases | Value |
|---|---|---|---|---|
| OpenStack | 7 | 6 | | |
| VMware | 8 | 9 | | |

As you can see, there are gaps between VMware and OpenStack, and even where both offer a feature, the implementations differ. OpenStack and VMware are in a battle, with each side matching the other's features. This is good for OpenStack, as VMware is extremely expensive and OpenStack is free. VMware has spent a lot of money developing these features, and that cost must be passed on to the consumer, whereas OpenStack features are developed by the community and can be consumed freely.

Round 3: Use Cases

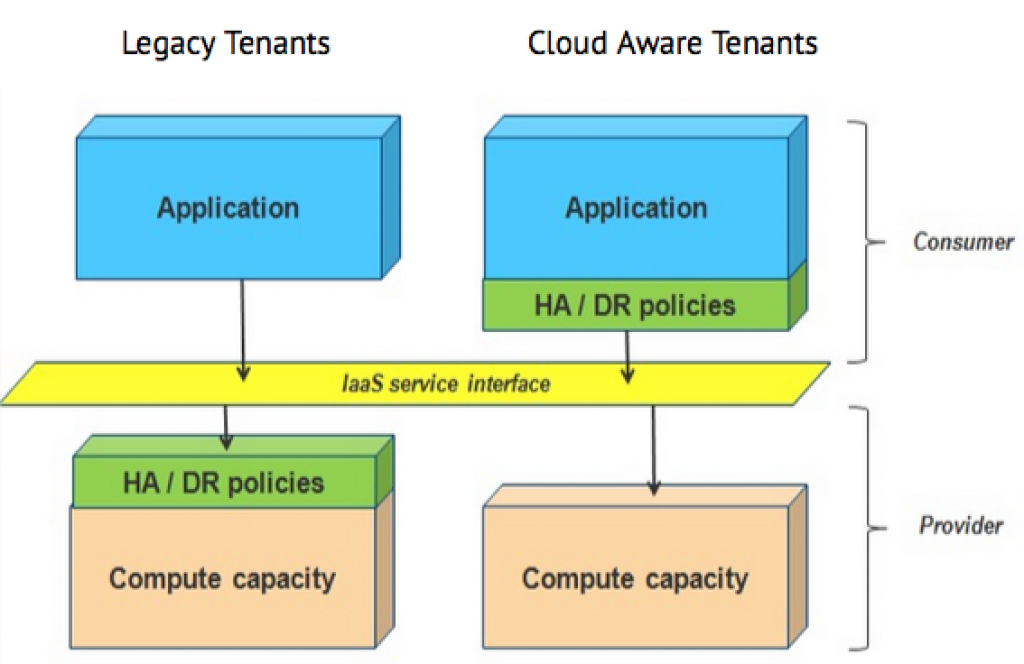

Before we can assign value to the features above, we need to think about use cases. In the cloud ecosystem, there are two types of tenants that consume infrastructure as a service: cloud-aware and legacy. Cloud-aware applications handle HA and DR policies on their own, while legacy applications rely on the infrastructure to provide HA and DR. See the diagram from a VMware cloud architect's article.

Cloud-aware apps manage their own HA and DR policies; legacy applications rely on the infrastructure. (Source: VMware)


Common characteristics of cloud-aware applications:

- Distributed

- Stateless/soft-state

- Failover is in the app

- Scaling is in the app

Common characteristics of legacy applications:

- Client-server architecture

- Typically a monolithic chunk of code; hard to scale horizontally

- Failover is in the infrastructure

- Scaling is in the infrastructure
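To make "failover is in the app" concrete, here is a minimal sketch of a client for a stateless, horizontally scaled service that simply tries the next replica when one is unreachable. The `ReplicaClient` class and the injected `send` callable are hypothetical; a real client would add timeouts, backoff, and health checks.

```python
class ReplicaClient:
    """Hypothetical client for a stateless service running on several VMs.

    Failover lives in the application: if one replica is unreachable,
    the client simply tries the next one.
    """

    def __init__(self, replicas, send):
        self.replicas = list(replicas)
        self.send = send  # send(replica, request) -> response; may raise ConnectionError

    def call(self, request):
        errors = []
        for replica in self.replicas:
            try:
                return self.send(replica, request)
            except ConnectionError as exc:
                errors.append(f"{replica}: {exc}")
        raise RuntimeError("all replicas failed: " + "; ".join(errors))


def fake_send(replica, request):
    # Stand-in transport: pretend vm-1's host has just died.
    if replica == "vm-1":
        raise ConnectionError("host unreachable")
    return f"{replica} handled {request}"


client = ReplicaClient(["vm-1", "vm-2", "vm-3"], fake_send)
print(client.call("GET /status"))
```

Because the service keeps no state on any single VM, losing vm-1 costs one failed attempt rather than an outage, with no HA or FT needed from the infrastructure.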

Pet vs. Cattle


Scoring - Use Cases

| Category | Design | Features | Use Cases | Value |
|---|---|---|---|---|
| OpenStack | 7 | 6 | 8 | |
| VMware | 8 | 9 | 6 | |

Round 4: Value

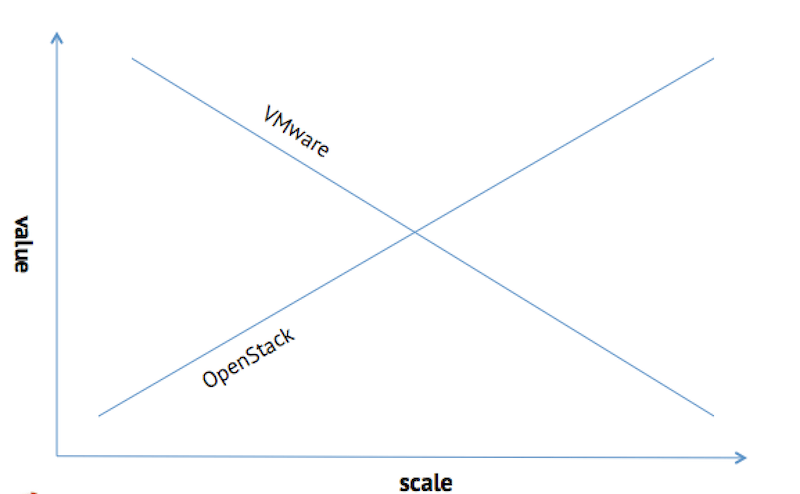

Here comes the final round that decides it all. However, which contender provides the best value isn't clear-cut, since it depends on scale. While OpenStack is free to use, it requires significant engineering resources and expertise. It also takes more effort to architect and stand up, since it supports so many deployment scenarios and installation patterns are never the same. VMware has licensing costs but should be easier to install and get running. It's also easier to train staff on point-and-click interfaces than on a command line.

In short, OpenStack has a higher initial cost, but as projects scale, you will get more value, due to the lack of licensing fees. VMware will be cheaper for smaller installations, but the value will diminish as you increase scale. That being said, cloud use cases are trending toward large scale and as people get more experience with OpenStack, the initial costs will be lower.
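The scale argument can be expressed as a simple break-even calculation. Under the simplifying assumption that both platforms share the same per-host hardware and operations cost, OpenStack costs more up front but saves the per-host license, so it pulls ahead once the host count covers the extra up-front effort. All figures below are placeholders, not real prices, and `break_even_hosts` is a hypothetical helper.

```python
import math


def break_even_hosts(openstack_upfront, vmware_upfront, license_per_host):
    """Smallest host count at which OpenStack's total cost is <= VMware's.

    Simplified model (assumption): per-host hardware and operations cost
    is identical for both, so totals differ only by up-front effort and
    per-host licensing:
        OpenStack = openstack_upfront + N * c
        VMware    = vmware_upfront + N * (c + license_per_host)
    """
    if license_per_host <= 0:
        raise ValueError("license_per_host must be positive")
    extra_upfront = openstack_upfront - vmware_upfront
    if extra_upfront <= 0:
        return 0  # OpenStack is never the more expensive option here
    return math.ceil(extra_upfront / license_per_host)


# Placeholder figures only, not real prices.
print(break_even_hosts(500_000, 100_000, 5_000))
```

With a hypothetical 400,000 in extra up-front cost and a 5,000 per-host license, the break-even point is 80 hosts. Shrinking the up-front cost as teams gain OpenStack experience moves that point down.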

Scoring - Value

| Category | Design | Features | Use Cases | Value |
|---|---|---|---|---|
| OpenStack | 7 | 6 | 8 | 10 |
| VMware | 8 | 9 | 6 | 6 |

And the winner is...

Scoring - Final

| Category | Design | Features | Use Cases | Value | Total |
|---|---|---|---|---|---|
| OpenStack | 7 | 6 | 8 | 10 | 31 |
| VMware | 8 | 9 | 6 | 6 | 29 |
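The final tally is a plain, equally weighted sum of the four category scores. The sketch below reproduces it and shows how an optional weighting changes the outcome; the `total` helper and the example weights are hypothetical.

```python
SCORES = {
    "OpenStack": {"Design": 7, "Features": 6, "Use Cases": 8, "Value": 10},
    "VMware": {"Design": 8, "Features": 9, "Use Cases": 6, "Value": 6},
}


def total(scores, weights=None):
    """Weighted sum of category scores; unlisted categories get weight 1."""
    weights = weights or {}
    return sum(score * weights.get(category, 1) for category, score in scores.items())


for contender, scores in SCORES.items():
    print(contender, total(scores), total(scores, {"Features": 2}))
```

With equal weights the totals are 31 and 29; doubling the weight of Features alone gives 37 and 38, enough to flip the result.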

Author's note

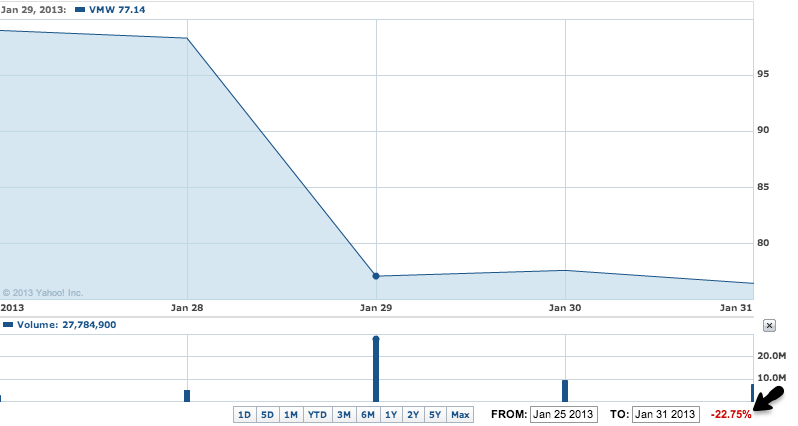

Coincidentally, at the time of this writing, VMware stock plunged 22 percent in a single day on January 29, with market analysts citing the lack of a clear and well-defined cloud strategy and a weak outlook...

I understand that some of you may disagree with my scoring and with the fact that I assigned the same weight to each category. Truth be told, the scoring is far from perfect and entirely subjective; its purpose was simply to make the material a little more interesting. That said, please feel free to give your opinions in the comments!