Integrating VMware ESXi with OpenStack & OpenContrail

As OpenStack integrators, we discuss the interoperability of VMware and KVM virtualization with customers on a daily basis. We usually answer questions like:

- Can we use KVM for production? Is it not just for experimental purposes?

- How do we integrate our current VMware vSphere environment?

- Is it possible to use a hybrid environment with KVM together with VMware?

- What limitations and obstacles do we get with a hybrid environment?

Today I would like to answer the questions about networking in a hybrid hypervisor environment. The vanilla Neutron implementation using Open vSwitch cannot be used with VMware infrastructure. For compute there is only the Nova vCenter driver (the ESXi driver was deprecated in the Icehouse release), and for volumes there is the Cinder VMDK driver. For network virtualization, only Nova flat networks can be used. There is an effort to provide at least a Neutron VLAN driver, but the development is done outside the OpenStack community. The reason is that VMware pushes customers to buy its SDN solution, VMware NSX, and use the Neutron NSX plugin. This is not an open source solution and it is very hard to get for testing. Therefore the only way to get SDN features and stay in the open source world (with the possibility of commercial support) is OpenContrail, which introduces VMware ESXi support in the new release 2.0.

This first implementation offers limited Contrail compute node functionality on hypervisors running the VMware ESXi virtualization platform. The next releases will provide a vCenter driver with Live Migration, DRS, and other VMware functions. Currently Contrail supports only the standard VMware vSwitches and port groups in ESXi. Distributed vSwitch functionality will be supported by the vCenter plugin too. Today the most important thing is to verify the functionality and measure real performance.

To run OpenContrail on ESXi 5.5, a virtual machine is spawned on a physical ESXi server and the compute node is configured on that virtual machine. For OpenContrail on ESXi, only compute node functionality is provided.

The Contrail compute virtual machine can be downloaded as a VMDK from the Juniper website. The Contrail ESXi VM for Ubuntu 12.04.3 LTS is a standard Ubuntu image with a few modifications:

- Python bit string library

- Script to set hostname from DHCP (dhclient-exit-hooks)

- Disk size is set to 60GB so that it can hold the Contrail packages repository

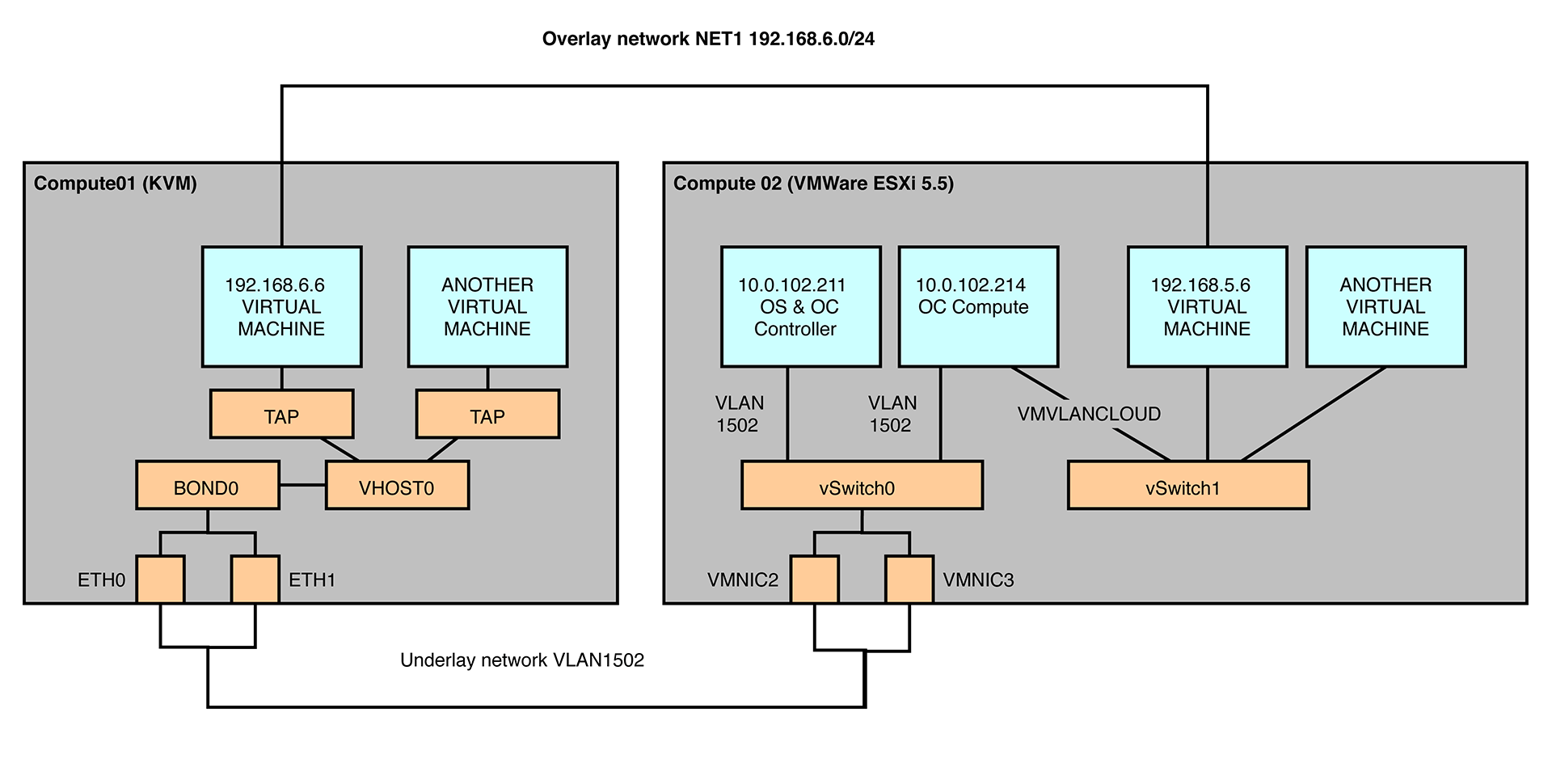

Architectural Scenario

We show how to deploy a hybrid KVM and VMware OpenStack cloud through the Contrail fabric deploy scripts. We dedicated two physical IBM HS22V blade servers with two 10Gbps NICs for this purpose.

- OpenStack and Contrail Controller - 10.0.102.211

- KVM Compute - 10.0.102.215

- Contrail Compute VM - 10.0.102.214

- ESXi Management IP - 10.0.103.234

We use MPLSoverGRE for overlay encapsulation.

Our testing scenario covers:

- Deployment and installation

- Functional testing

- Performance testing

Testing Scenario Deployment

The following list covers the deployment process:

- Download contrail-install-packages_2.0-22~icehouse_all.deb from http://www.juniper.net/support/downloads/?p=contrail#sw and copy it to /tmp/ on the first server for further system installation.

- Install the package with:

dpkg -i /tmp/contrail-install-packages_2.0-22~icehouse_all.deb

- Run the setup.sh script. This step will create the Contrail packages repository as well as the Fabric utilities needed for provisioning:

cd /opt/contrail/contrail_packages

./setup.sh

- Fill in the testbed definitions file.

from fabric.api import env

#Management ip addresses of hosts in the cluster

#Controller

host1 = 'root@10.0.102.211'

#Contrail-compute-vm

host2 = 'root@10.0.102.214'

#KVM compute node

host3 = 'root@10.0.102.215'

#External routers if any

ext_routers = []

#Autonomous system number

router_asn = 64512

#Host from which the fab commands are triggered to install and provision

host_build = 'root@10.0.102.211'

#Role definition of the hosts.

env.roledefs = {

'all': [host1,host2,host3],

'cfgm': [host1],

'openstack': [host1],

'control': [host1],

'compute': [host1,host2,host3],

'collector': [host1],

'webui': [host1],

'database': [host1],

'build': [host_build],

'storage-master': [host1],

'storage-compute': [host1],

# 'vgw': [host1], # Optional, Only to enable VGW. Only compute can support vgw

# 'backup':[backup_node], # only if the backup_node is defined

}

#Openstack admin password

env.openstack_admin_password = 'password'

#Hostnames

env.hostnames = {

'all': ['a0s1','contrail-vm-compute2']

}

env.password = 'password'

#Passwords of each host

env.passwords = {

host1: 'password',

host2: 'c0ntrail123',

host3: 'password',

# backup_node: 'secret',

host_build: 'password',

}

#For reimage purpose

env.ostypes = {

host1:'ubuntu',

host3:'ubuntu',

}

#Following are ESXi Hypervisor details.

esxi_hosts = {

#Ip address of Hypervisor

'esxi_host1' : {'ip': '10.0.103.234',

#Username and password of ESXi Hypervisor

'username': 'root',

'password': 'password',

#Uplink port of Hypervisor through which it is connected to external world

'uplink_nic': 'vmnic2',

#Vswitch on which above uplink exists

'fabric_vswitch' : 'vSwitch0',

#Port group on 'fabric_vswitch' through which ContrailVM connects to external world

'fabric_port_group' : 'VLAN1502',

#Vswitch name to which all openstack virtual machine's are hooked to

'vm_vswitch': 'vSwitch1',

#Port group on 'vm_vswitch', which is a member of all vlans, to which ContrailVM is connected to all openstack VM's

'vm_port_group' : 'VMvlanCloud',

#links 'host2' ContrailVM to esxi_host1 hypervisor

'contrail_vm' : {

'name' : 'contrail-vm-compute2', # Name for the contrail-compute-vm,

'mac' : '00:50:56:8c:c6:85', # VM's eth0 mac address, same should be configured on DHCP server

'host' : host2, # host string for VM, as specified in the env.roledefs['compute']

'vmdk' : '/opt/ESXi-v5.5-Contrail-host-Ubuntu-precise-12.04.3-LTS.vmdk', # local path for the VMDK file

},

# Another ESXi hypervisor follows

},

}
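Because the ESXi section has to line up with the role and password definitions, a quick consistency check before running any fab task can save a failed provisioning run. The following is only a minimal sketch: `check_testbed` is a hypothetical helper, not part of the Contrail fabric scripts, and it works on plain dictionaries copied from the testbed file above rather than importing Fabric.

```python
# Hypothetical helper (an assumption, not part of the Contrail fabric scripts).
def check_testbed(roledefs, passwords, esxi_hosts):
    """Return a list of human-readable problems found in the definitions."""
    problems = []
    all_hosts = set(roledefs.get('all', []))

    # Every host used in any role should also be listed under 'all'.
    for role, hosts in sorted(roledefs.items()):
        for host in hosts:
            if host not in all_hosts:
                problems.append("%s has role %r but is missing from 'all'" % (host, role))

    # Every host should have a password entry.
    for host in sorted(all_hosts):
        if host not in passwords:
            problems.append("no password defined for %s" % host)

    # Each ContrailVM needs the keys used in the testbed file and must be
    # one of the hosts in the 'compute' role.
    required = ('name', 'mac', 'host', 'vmdk')
    for esxi_name, esxi in sorted(esxi_hosts.items()):
        vm = esxi.get('contrail_vm', {})
        for key in required:
            if key not in vm:
                problems.append("%s: contrail_vm lacks %r" % (esxi_name, key))
        if vm.get('host') not in roledefs.get('compute', []):
            problems.append("%s: contrail_vm host is not in the 'compute' role" % esxi_name)
    return problems


# Values copied from the testbed file above, trimmed to the relevant keys.
host1 = 'root@10.0.102.211'
host2 = 'root@10.0.102.214'
host3 = 'root@10.0.102.215'
roledefs = {
    'all': [host1, host2, host3],
    'cfgm': [host1],
    'compute': [host1, host2, host3],
    'build': [host1],
}
passwords = {host1: 'password', host2: 'c0ntrail123', host3: 'password'}
esxi_hosts = {
    'esxi_host1': {
        'ip': '10.0.103.234',
        'contrail_vm': {
            'name': 'contrail-vm-compute2',
            'mac': '00:50:56:8c:c6:85',
            'host': host2,
            'vmdk': '/opt/ESXi-v5.5-Contrail-host-Ubuntu-precise-12.04.3-LTS.vmdk',
        },
    },
}

print(check_testbed(roledefs, passwords, esxi_hosts))  # prints []
```

An empty list means the three sections agree with each other; it says nothing about whether the ESXi host itself is reachable.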

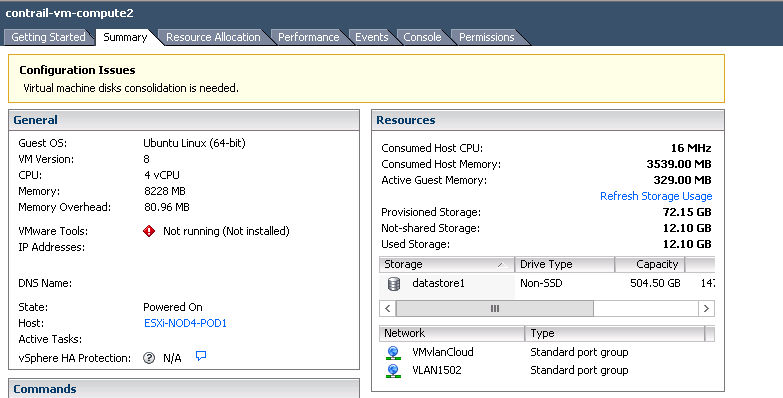

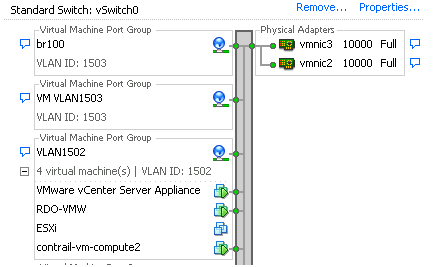

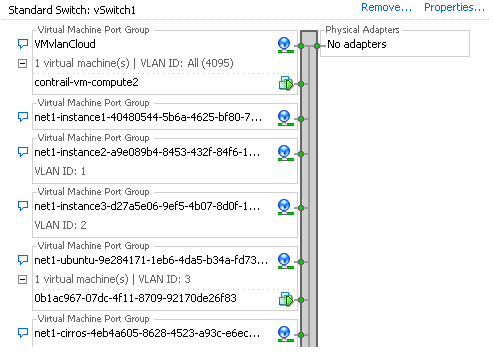

- The fab prov_esxi task configures the ESXi hypervisor (SSH root login must be permitted) and launches the Contrail compute VM. This task creates or verifies two vSwitches and their port groups, and deploys the VM with two NICs connected to these two vSwitches. The installation can also be verified in the vSphere client, where you should see two vSwitches.

The screens show the newly created vSwitches and port groups. We use ESXi for other purposes as well, so there are more port groups.

After installation, vSwitch1 contains only the single contrail-compute-vm. This screen was captured after instances were provisioned.

- If you do not have a DHCP server in your network, you need to set a static IP address inside the contrail-compute-vm. The default password for the root user is c0ntrail123.

- When the contrail-compute-vm is ready, you can continue with standard Contrail installation commands:

fab install_pkg_all:/tmp/<pkg>

fab install_contrail

fab setup_all

- You can verify the VMware configuration on the contrail-compute-vm. Look at the Nova service configuration in /etc/nova/nova.conf.

[DEFAULT]

…

compute_driver = vmwareapi.ContrailESXDriver

…

[vmware]

host_ip = 10.0.103.234

host_username = root

host_password = cloudlab

vmpg_vswitch = vSwitch1

…

/etc/contrail/contrail-vrouter-agent.conf

…

[HYPERVISOR]

# Everything in this section is optional

# Hypervisor type. Possible values are kvm, xen and vmware

type=vmware

mode=

…

# Physical interface name when hypervisor type is vmware

vmware_physical_interface=eth1

…
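The manual check of both files can be scripted. The sketch below is an illustration under assumptions: it parses trimmed copies of the two snippets above with Python's standard configparser instead of reading the real files, and `check_vmware_compute` is a hypothetical helper name. Only options that appear in the snippets are checked.

```python
import configparser

# Trimmed copies of the snippets above (the elided parts are left out).
NOVA_CONF = """
[DEFAULT]
compute_driver = vmwareapi.ContrailESXDriver

[vmware]
host_ip = 10.0.103.234
host_username = root
vmpg_vswitch = vSwitch1
"""

AGENT_CONF = """
[HYPERVISOR]
# Hypervisor type. Possible values are kvm, xen and vmware
type=vmware
mode=
vmware_physical_interface=eth1
"""


def load(text):
    # interpolation=None keeps '%' characters literal; strict=False tolerates
    # duplicate options, which can occur in hand-edited files.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(text)
    return parser


def check_vmware_compute(nova, agent):
    """Hypothetical helper: list the settings that do not match the snippets above."""
    problems = []
    driver = nova.get('DEFAULT', 'compute_driver', fallback='')
    if driver != 'vmwareapi.ContrailESXDriver':
        problems.append('unexpected compute_driver: %r' % driver)
    for option in ('host_ip', 'host_username', 'vmpg_vswitch'):
        if not nova.get('vmware', option, fallback=''):
            problems.append('[vmware] %s is not set' % option)
    if agent.get('HYPERVISOR', 'type', fallback='') != 'vmware':
        problems.append('[HYPERVISOR] type is not vmware')
    if not agent.get('HYPERVISOR', 'vmware_physical_interface', fallback=''):
        problems.append('[HYPERVISOR] vmware_physical_interface is not set')
    return problems


print(check_vmware_compute(load(NOVA_CONF), load(AGENT_CONF)))  # prints []
```

To check a live node, the same function could be fed parsers created with `parser.read('/etc/nova/nova.conf')` and `parser.read('/etc/contrail/contrail-vrouter-agent.conf')`.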

Now we can start testing the newly deployed hybrid infrastructure.

Functional Testing

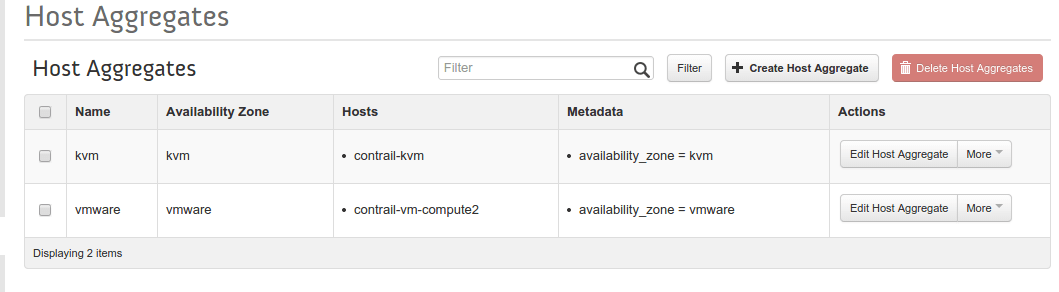

At this point, we have created one virtual network, net1, with the address range 192.168.6.0/24 in OpenStack. Before the actual provisioning, we created two availability zones based on hypervisor type. We then provisioned several VMs to the different availability zones/hypervisors.

Nova service status can be verified through the command nova-manage service list.

root@contrail:~# nova-manage service list

Binary Host Zone Status State Updated_At

nova-scheduler contrail internal enabled :-) 2015-02-08 21:47:55

nova-console contrail internal enabled :-) 2015-02-08 21:47:55

nova-consoleauth contrail internal enabled :-) 2015-02-08 21:47:55

nova-conductor contrail internal enabled :-) 2015-02-08 21:47:55

nova-compute contrail-kvm kvm enabled :-) 2015-02-08 21:47:55

nova-compute contrail-vm-compute2 vmware enabled :-) 2015-02-08 21:47:52
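The same check can be automated when the environment is rebuilt often. The sketch below parses the output shown above, pasted in as a string, and groups the healthy nova-compute hosts by zone. The function names are hypothetical, and the parser assumes the whitespace-separated column layout of this listing, where `:-)` marks a service that is alive.

```python
SAMPLE = """\
Binary Host Zone Status State Updated_At
nova-scheduler contrail internal enabled :-) 2015-02-08 21:47:55
nova-console contrail internal enabled :-) 2015-02-08 21:47:55
nova-consoleauth contrail internal enabled :-) 2015-02-08 21:47:55
nova-conductor contrail internal enabled :-) 2015-02-08 21:47:55
nova-compute contrail-kvm kvm enabled :-) 2015-02-08 21:47:55
nova-compute contrail-vm-compute2 vmware enabled :-) 2015-02-08 21:47:52
"""


def parse_services(output):
    """Turn the listing into a list of dictionaries, skipping the header."""
    services = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 5 or fields[0] == 'Binary':
            continue
        binary, host, zone, status, state = fields[:5]
        services.append({'binary': binary, 'host': host, 'zone': zone,
                         'status': status, 'state': state})
    return services


def computes_by_zone(services):
    """Group enabled and alive nova-compute hosts by availability zone."""
    zones = {}
    for svc in services:
        if (svc['binary'] == 'nova-compute'
                and svc['status'] == 'enabled'
                and svc['state'] == ':-)'):
            zones.setdefault(svc['zone'], []).append(svc['host'])
    return zones


print(computes_by_zone(parse_services(SAMPLE)))
# prints {'kvm': ['contrail-kvm'], 'vmware': ['contrail-vm-compute2']}
```

Seeing one compute host in each of the kvm and vmware zones confirms that both hypervisor types are available to the scheduler.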

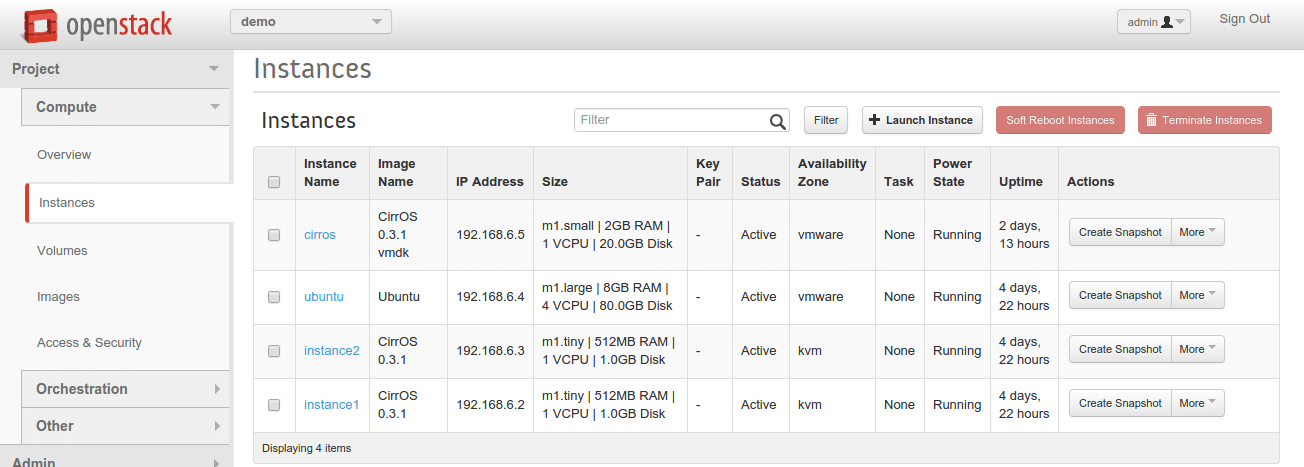

We provisioned 4 instances in total, 2 on each hypervisor.

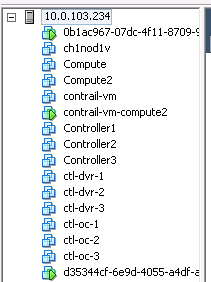

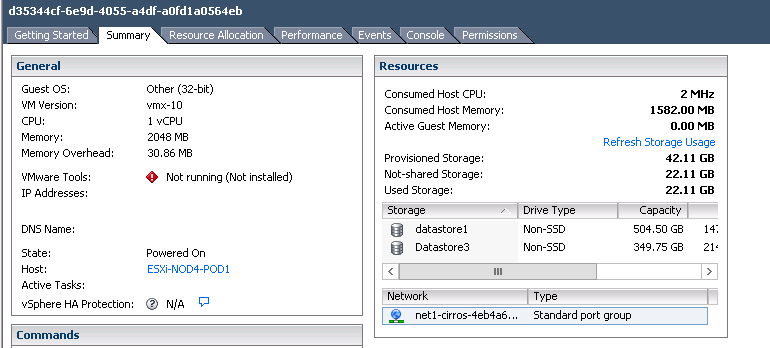

The VMware ESXi compute driver creates each instance with its UUID as the name.

The following screen shows the details of the Ubuntu server instance on the ESXi hypervisor.

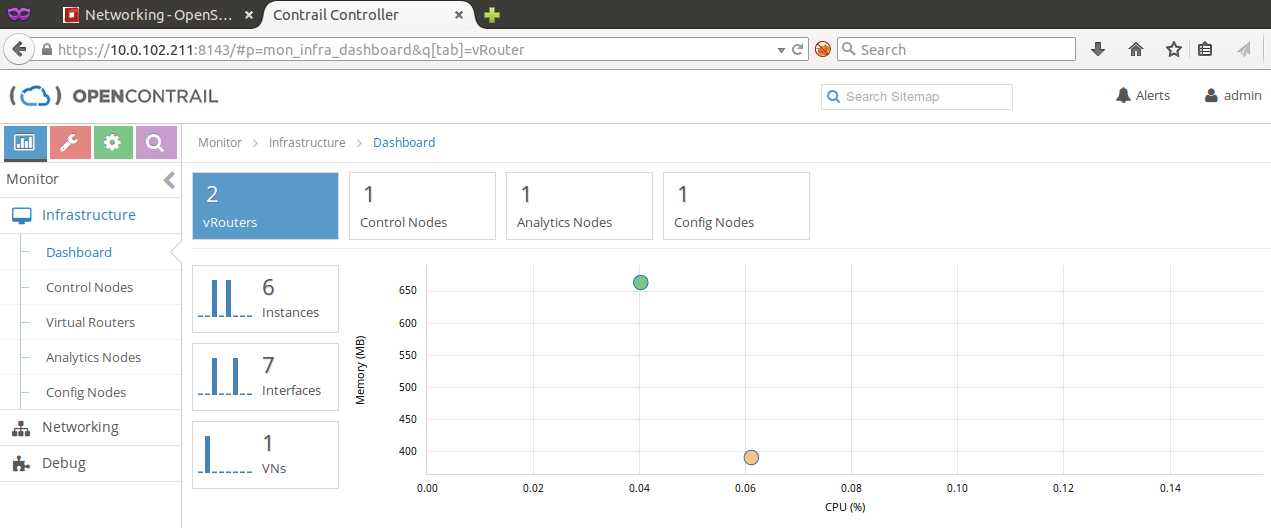

The OpenContrail dashboard shows the contrail-compute-vm as a standard vRouter node.

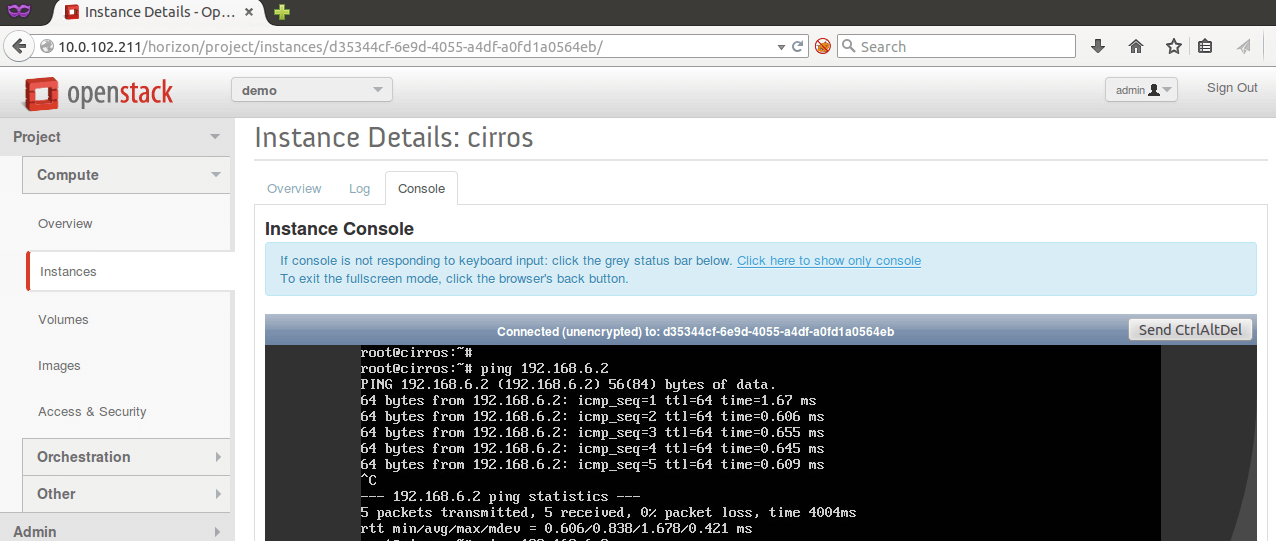

This screen shows that a standard OpenStack user cannot see any difference between instances running on ESXi or KVM virtualization. We are able to ping virtual machines that run on different hypervisors and are connected to the same overlay network.

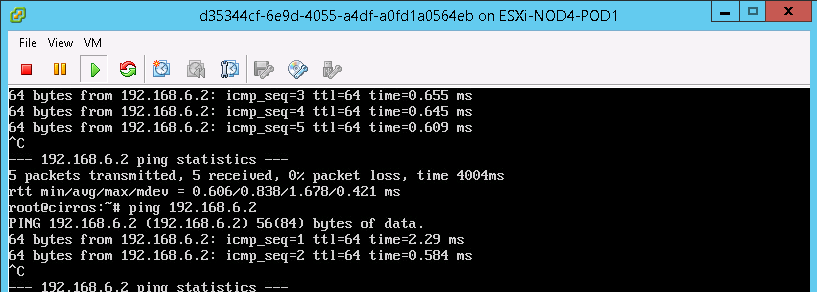

An administrator can also use the vSphere console to see the very same virtual machine console output.

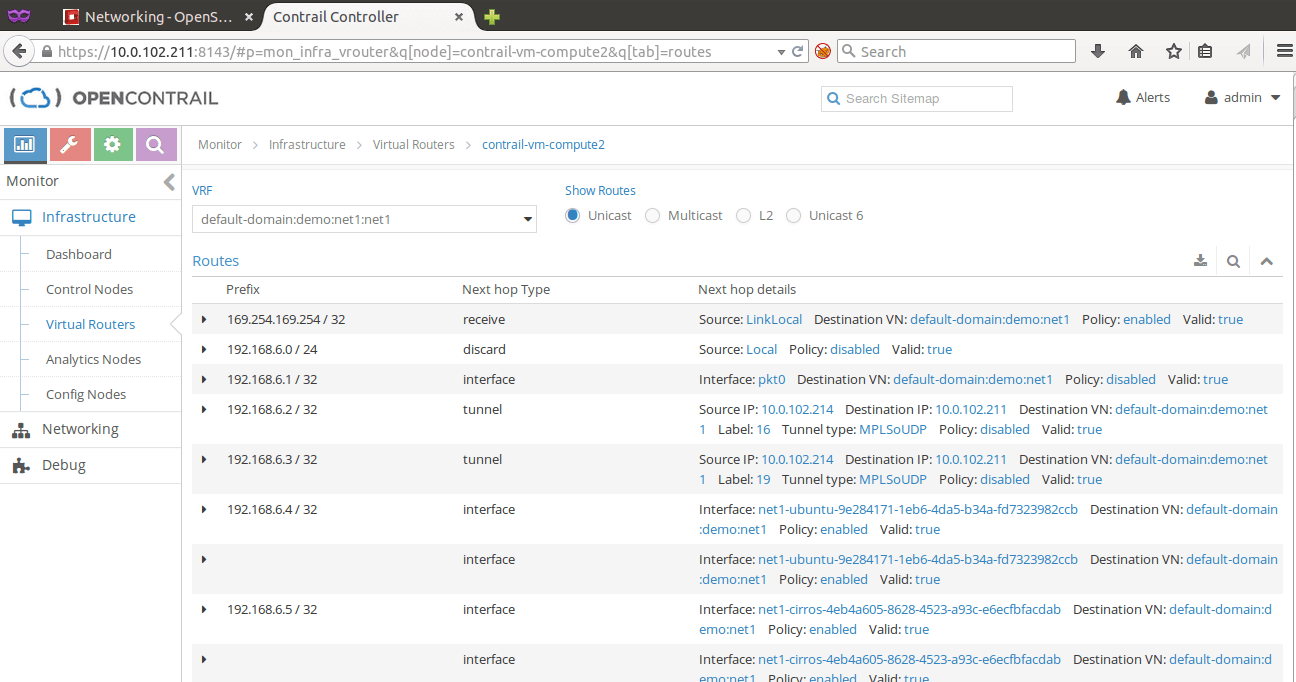

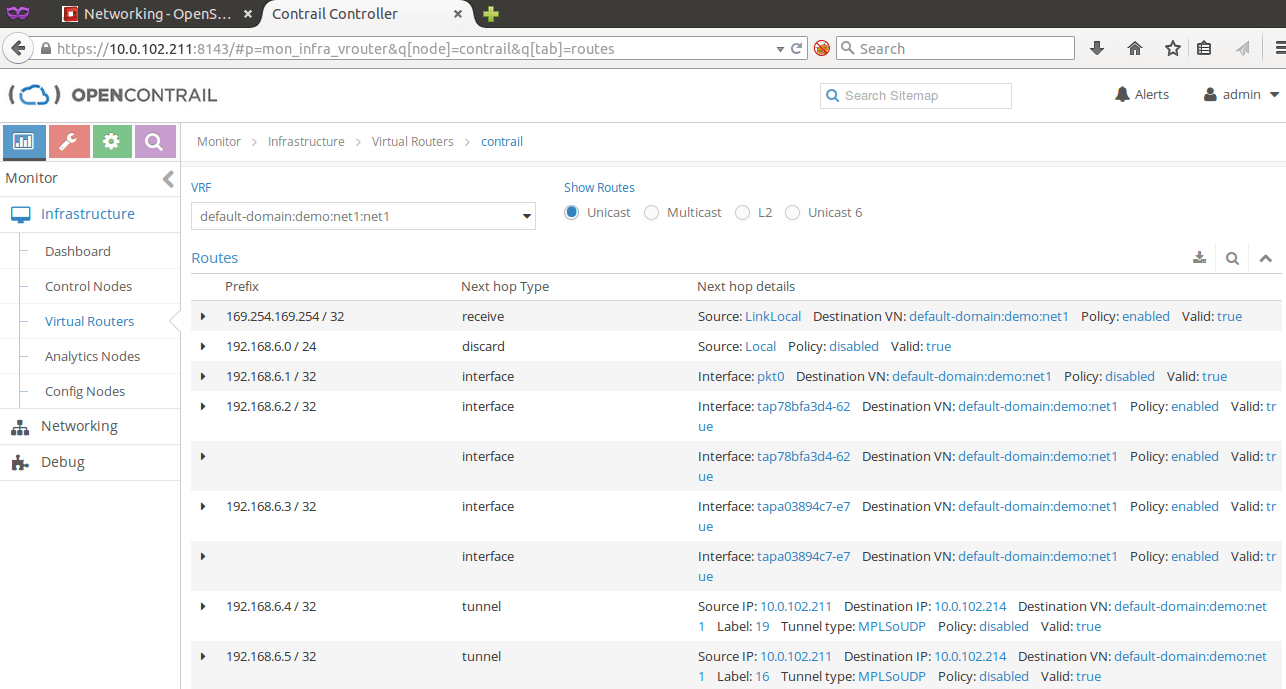

The following images show the routes at both vRouters in the OpenContrail dashboard.

Routes at the ESXi hypervisor:

Routes at the KVM hypervisor:

Performance Testing

The most important and interesting part is measuring the throughput between the VMware ESXi and KVM hypervisors. OpenContrail's near line-rate bandwidth (9.6Gbit on 10Gbps links) is well known, but how fast is the VMware contrail-compute-vm? We decided to use iperf for the network testing. Iperf is a tool for measuring maximum TCP bandwidth that allows tuning of various TCP/UDP parameters and characteristics. It reports bandwidth, delay jitter, datagram loss and other useful information.

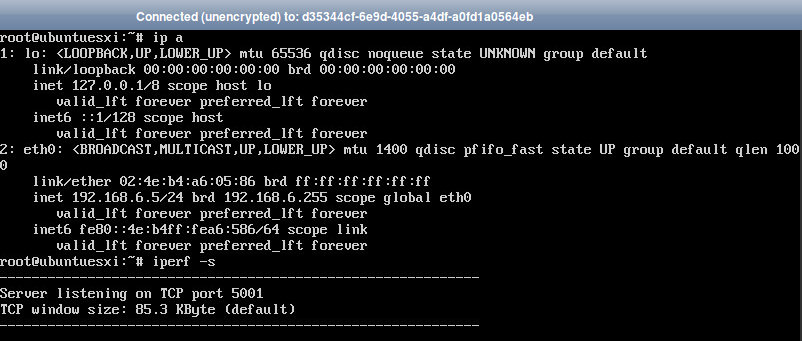

- The UbuntuESXi instance is running on the ESXi hypervisor with IP address 192.168.6.5. On it we start the iperf server against which we conduct the tests.

- CentosKVM is the second instance, running on the KVM hypervisor with IP address 192.168.6.6.

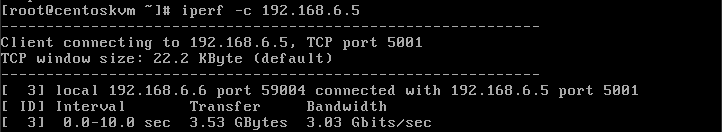

The first measurement is one session from centoskvm to ubuntuesxi.

As you can see from the screenshot, the network throughput was 3.03Gbits/sec without any special performance tuning.

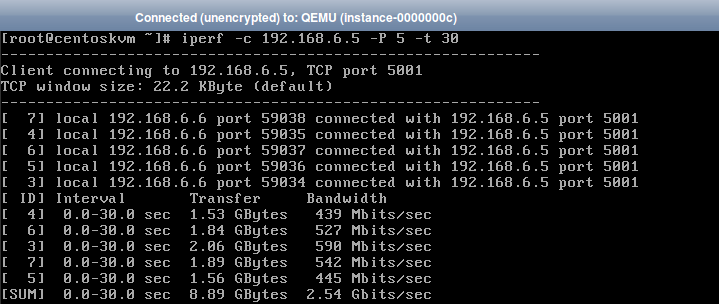

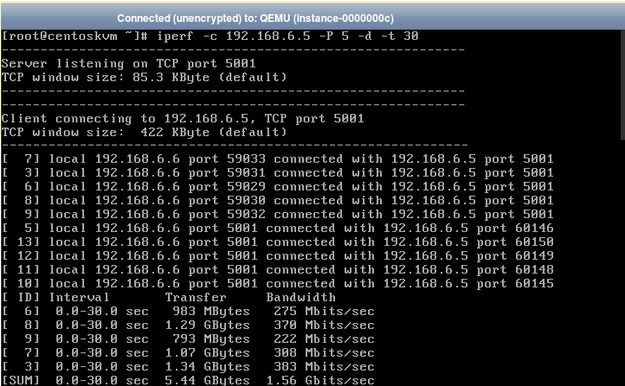

- The next test launches 5 parallel sessions in dual testing mode for 30 seconds.

- The final test launches 5 parallel sessions in dual testing mode for 30 seconds.

You can see that the maximum bandwidth reached is about 3Gbits/sec.
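For readers who want to repeat the measurements, the sketch below builds the command lines for the tests described above. It assumes iperf version 2, whose `-P`, `-d` and `-t` options select parallel streams, dual testing mode and duration. The exact options used for the screenshots are not given in the text, so treat these as a plausible reconstruction. The helper name is hypothetical.

```python
def iperf_client_cmd(server_ip, parallel=1, dual=False, seconds=None):
    """Build an iperf (version 2) client command as an argument list."""
    cmd = ['iperf', '-c', server_ip]
    if parallel > 1:
        cmd += ['-P', str(parallel)]   # number of parallel client sessions
    if dual:
        cmd.append('-d')               # dual test: both directions at once
    if seconds is not None:
        cmd += ['-t', str(seconds)]    # test duration in seconds
    return cmd


SERVER_IP = '192.168.6.5'  # the UbuntuESXi instance running the iperf server

# Server side, started on the UbuntuESXi instance.
print('iperf -s')
# Single session from centoskvm to ubuntuesxi.
print(' '.join(iperf_client_cmd(SERVER_IP)))
# 5 parallel sessions in dual testing mode for 30 seconds.
print(' '.join(iperf_client_cmd(SERVER_IP, parallel=5, dual=True, seconds=30)))
```

The argument lists can be passed to `subprocess.run` on the client instance. They are only printed here so the sketch has no side effects.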

Conclusion

We proved that a hybrid hypervisor environment is possible to build and that it delivers quite good performance out of the box. The bandwidth of 3Gbits/sec is similar to what other SDN solutions like VMware NSX or PLUMgrid can achieve. These solutions also use virtual machines as gateways (north-south traffic) for OpenStack Neutron.

A future release will support vCenter with advanced VMware features and the possibility of integrating it with ToR OVSDB switches through VXLAN encapsulation. In that case there will be no need for a dedicated service virtual machine on each ESXi host.

Jakub Pavlik

tcp cloud platform engineer