3 Myths of OpenStack Distros, parts 2 and 3

OpenStack Distro Myth #2: All distributions are equally stable and interoperable

If we were really being proper, we'd state Myth #2 this way: All distributions have the same level of testing, which leads to identical levels of stability and interoperability.

Like Myth #1, this statement seems seductively plausible. After all, everyone uses the same source code, which has been tested by the same community. How can distributions have different levels of testing? And since testing ultimately controls stability and interoperability, how can those be any different?

For this myth to be debunked, two things need to be true:

- A distribution vendor needs to perform validation and security testing more comprehensively than the community

- This testing should result in hardening in the form of bug and security fixes, removal of unstable community patches, and distro-specific selection/configuration of middleware

Testing of OpenStack Distributions

The community performs extensive testing of OpenStack, as you can see in this overview of the OpenStack continuous integration (CI) process. The community runs a test suite called Tempest to ensure stability and interoperability, and it also performs security testing, but this testing is not meant to be comprehensive or enterprise-level. It is meant simply to ensure that OpenStack "works", and it has some limitations:

- Tempest checks OpenStack through public APIs and interfaces. It deliberately does no testing through private or implementation-specific interfaces; for example, it does not go directly into a database to check entries, and it does not touch any hypervisor.

- The OpenStack CI spins up an entire cluster on a single VM, so no multi-node tests are run (with the exception of the Ironic project).

- The community process does not include high-availability (HA) testing.

- Third party drivers are tested by their respective vendors, with varying coverage.

For example, Mirantis OpenStack performs a large number of tests over and above what the community does:

| Type of Test | Purpose |
|---|---|
| Functional Validation | The control-plane API is validated with OpenStack Tempest, and functional validation is performed with Mirantis Health Check (the Mirantis OSTF test suite). Test suites are run in parallel to find dependent failures. |
| System Validation | Typical usage scenarios, corner cases, customer-found issues, and so on are validated with an automated testing framework (the Swarm test suite). The system under test includes Ceph. |
| Scale Lab | OpenStack Rally is used to benchmark and verify API performance under load, and the Mirantis Shaker test suite confirms data-plane performance in an environment with hundreds of nodes and tens of thousands of instances. |
| Package Validation | Packages are individually validated, along with their dependencies, against target operating environments. Packages are then deployed into a working environment according to Mirantis reference architectures. |
| Destructive Testing (Resiliency and HA) | Node, controller, and service failures are injected to validate that high availability functions properly. |
| Negative Testing | Invalid data is injected into a system under load; for example, an API request with an invalid set of parameters. |
| Longevity Testing | Long-term testing of OpenStack under load to find intermittent, hard-to-find issues and issues that only appear after the system has been running for a long time; for example, behavior when a log disk fills up. |
| Compatibility Testing | The Mirantis Partner Integrations team provides hardware and software compatibility testing for all Mirantis Certified technologies. It’s worth comparing the interop matrices (servers, networking, storage, host OS/hypervisor, CMP, PaaS, container frameworks, etc.) of various distro vendors. See the Mirantis interop matrix. |
| Security Testing | Vulnerability scans, threat analysis, and other security scans in addition to community testing. |
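To make the Negative Testing row concrete, here is a minimal sketch of the idea in Python: feed a handler requests that a correct API must refuse and report any that slip through. The handler `boot_instance`, the `BadRequest` exception, the flavor names, and the test cases are all hypothetical stand-ins loosely modeled on a "boot instance" request; this is not Nova's validation code or the Mirantis test suite, and it leaves out the "under load" part entirely.

```python
class BadRequest(Exception):
    """Stands in for an HTTP 400 response from a real API server."""


# Hypothetical handler loosely modeled on a "boot instance" request;
# it is not Nova's actual validation code.
VALID_FLAVORS = {"m1.small", "m1.medium"}


def boot_instance(params: dict) -> dict:
    name = params.get("name")
    if not isinstance(name, str) or not name.strip():
        raise BadRequest("name must be a non-empty string")
    if params.get("flavor") not in VALID_FLAVORS:
        raise BadRequest("unknown flavor")
    count = params.get("count", 1)
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(count, bool) or not isinstance(count, int) or count < 1:
        raise BadRequest("count must be a positive integer")
    return {"name": name, "flavor": params["flavor"], "count": count}


# Each case is a request that a correct API must refuse.
INVALID_REQUESTS = [
    {},
    {"name": "", "flavor": "m1.small"},
    {"name": "vm1", "flavor": "no-such-flavor"},
    {"name": "vm1", "flavor": "m1.small", "count": 0},
    {"name": "vm1", "flavor": "m1.small", "count": "3"},
    {"name": "vm1", "flavor": "m1.small", "count": True},
]


def run_negative_tests(handler) -> list:
    """Return the invalid requests that the handler wrongly accepted.

    Any exception other than BadRequest propagates, which also signals
    a bug: the handler crashed instead of cleanly rejecting the input.
    """
    accepted = []
    for params in INVALID_REQUESTS:
        try:
            handler(params)
        except BadRequest:
            continue  # rejected cleanly: the expected outcome
        accepted.append(params)
    return accepted
```

Running `run_negative_tests(boot_instance)` should return an empty list, while a handler that validates nothing (for example `lambda p: p`) returns every case. A real harness would send these requests over HTTP while other load is running, which is where the intermittent failures the table mentions tend to show up.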

Hardening of OpenStack Distributions

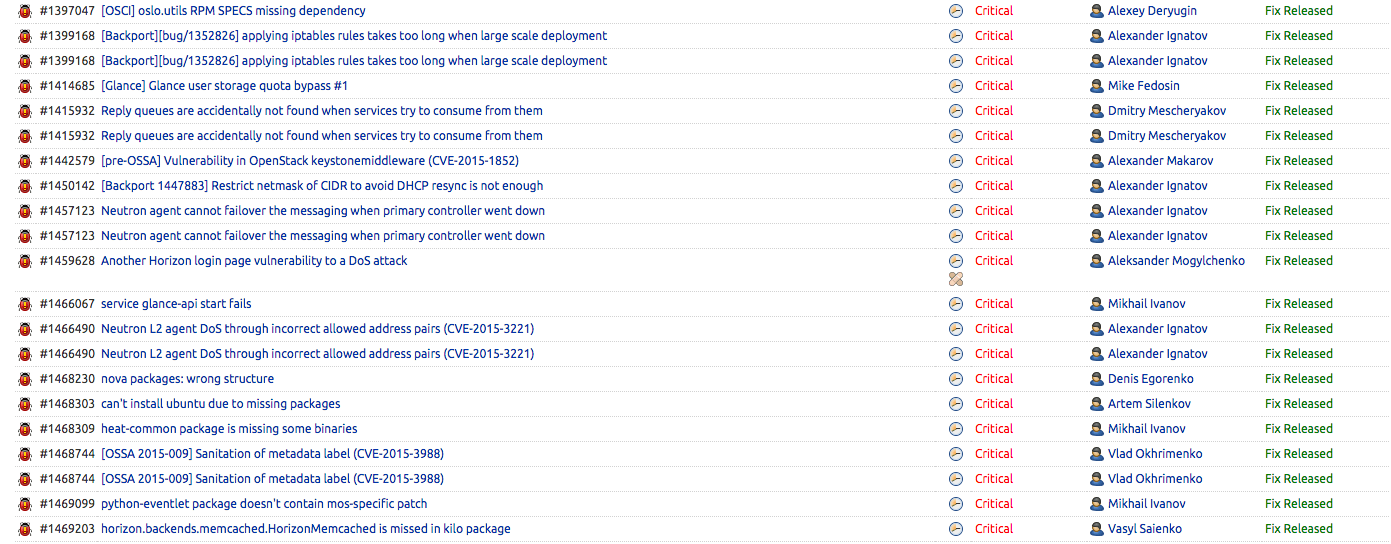

The additional testing must result in hardening of the distribution. In the last blog post, we looked at aspects of hardening such as HA middleware choices and configurations, reference architecture, and removal of unstable patches, so let’s focus this discussion on bug and security fixes. The testing described above resulted in around 330 bug fixes in Mirantis OpenStack 7.0, close to 40 of which are critical. That’s 40 good reasons not to use the community version of Kilo! (Aside: since our fixes are developed upstream, they will eventually make their way into a community release, so you're not getting locked into our distro.) Here’s a partial screenshot of our fixes.

Let’s explore a few of these to see what the impact might be for those using a release without these fixes:

| Issue | Reason for being critical | How did we find this and how did we fix it? |
|---|---|---|
| Keystone UPDATE and DELETE operations fail intermittently. | If you intend to remove or update a particular user's credentials, an intermittent failure could leave them in an inconsistent state. | Resiliency (HA) testing found this issue; it did not occur in a non-HA configuration. Fix details. |
| Horizon intermittently becomes unresponsive after running for a long time. | This directly impacts the availability of your cluster. | Longevity testing found that certain processes were gradually filling up memory, but so slowly that reproducing the problem took a long time. Fix details. |
| Applying iptables rules takes too long in a large installation. | This may prevent new VMs from launching, since the boot process could time out. | Scale testing found this error when we built a cluster with about 1,000 VMs. Fix details. |
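The Horizon row shows why longevity testing matters: a leak that grows memory very slowly is invisible in a short test run. The post doesn't describe how the growth was detected, so the following is only a generic sketch of one way a longevity harness might flag it, assuming memory samples (for example, a process's resident set size in bytes) collected at a fixed interval. It fits a least-squares line to the samples and flags the process if the slope exceeds a chosen budget. The function names and the threshold used below are hypothetical.

```python
from typing import Sequence


def growth_rate(samples: Sequence[float], interval_s: float) -> float:
    """Least-squares slope of memory samples, in bytes per second.

    `samples` are memory readings (e.g. RSS in bytes) taken every
    `interval_s` seconds over a long-running test.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = [i * interval_s for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, samples))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def looks_like_leak(samples: Sequence[float], interval_s: float,
                    max_bytes_per_hour: float) -> bool:
    """Flag a steady upward trend larger than the allowed hourly growth."""
    return growth_rate(samples, interval_s) * 3600 > max_bytes_per_hour
```

For example, a process that gains 1 KiB per minute over a 48-hour run (`[200_000_000 + 1024 * i for i in range(48 * 60)]`, sampled every 60 seconds) grows by 60 KiB per hour, so `looks_like_leak(samples, 60.0, 32 * 1024)` flags it, even though the total growth would be negligible in a test lasting only a few minutes. Fitting a line over the whole run, rather than comparing the first and last samples, makes the check less sensitive to short-lived spikes.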

OpenStack Distro Myth #3: Vendor distributions should be available concurrent with a given community release

With Myth #2 debunked, this one is easy to tackle. By definition, the process of testing, bug and security fixes, middleware tuning, and unstable patch removal takes time. I call this duration the “hardening window”. How long should this window be? If it is only a few days or weeks, you should worry that your distro vendor is simply repackaging community bits without actually hardening them. If it’s over 6 months, that’s too long, since the next OpenStack release would already have come out. At Mirantis we feel that 4-5 months is ideal: it’s not so short that we have to cut corners, and it’s not so long that we start running into the next release.

While 4-5 months might seem like a long wait to get your hands on the latest features of a release, the benefits of waiting are well worth it. First, the added hardening, as previously mentioned, improves the reliability and resiliency of the infrastructure you’re running your business on. Second, during this window, your vendor should continue to port fixes for bugs found by others in the community into the commercial distro. (We do at Mirantis.) That means that when you do get the commercial distro, it's a much more mature and stable product than the community version.
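The rules of thumb above can be written down as a small helper, shown here as an illustrative sketch rather than any vendor's actual policy. It assumes the six-month community release cadence implied by the post, reads "a few days or weeks" as anything under one month (an assumption), and uses hypothetical function names. It also computes how much time a distro has before the next community release once its hardening window closes.

```python
# Six-month community release cadence, as implied by the post.
RELEASE_CADENCE_MONTHS = 6


def assess_hardening_window(months: float) -> str:
    """Classify a hardening window using this post's rules of thumb."""
    if months < 1:  # assumption: "a few days or weeks" read as under a month
        return "suspiciously short: possibly repackaged community bits"
    if months > RELEASE_CADENCE_MONTHS:
        return "too long: the next community release is already out"
    if 4 <= months <= 5:
        return "in the 4-5 month range the post recommends"
    return "between the post's guideposts"


def months_of_runway(months: float) -> float:
    """Months between the distro shipping and the next community release."""
    return RELEASE_CADENCE_MONTHS - months
```

With a 4-month window, `months_of_runway(4)` returns 2: the hardened distro ships two months before the next community release, while a 5-month window leaves one month. That narrow margin is why a window much beyond 5 months starts to collide with the next release.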

Summary

Although it may seem as though all distros should be equally stable and interoperable, and that they should be released alongside the corresponding community version, the realities of hardening software mean that that's simply not possible. Make sure that your vendor is providing you with the best possible version of OpenStack, not just hitting the market as quickly as possible.

At Mirantis, we're very proud of the stability of Mirantis OpenStack 7.0. I'd like to invite you to download it now and see for yourself how well-tested and stable the release is. We welcome your feedback about this blog and about our product.