Getting OpenStack on Optimized Hardware

OpenStack gives users enormous configurational flexibility. Its components can be set up in many different ways to suit almost any vision of how a datacenter should work and scale. And OpenStack is adaptable: during initial experiments, users are often surprised and pleased to discover that OpenStack will run credibly on almost any hardware, from a collection of VirtualBox VMs running on a laptop to a handful of mismatched bare-metal servers. Automated deployment tools like Fuel can even make this process wizard-simple: auto-discovering available hardware, enabling testing and creating a working cloud at a single button-press on essentially any available hardware.

Learn more about OpenStack and optimized hardware: join the webcast by StackVelocity and Mirantis this Wednesday, April 30, at 9:00 AM Pacific.

It’s easy to conclude that OpenStack “doesn’t care very much about hardware.” That’s a double-edged sword, because it creates the optimistic impression that scaled-out, pilot-based OpenStack environments:

end up as a larger version of a small-scale pilot, with the same topology, and

can be built with similar ease, and

can be supported in production.

Now, this thinking even works, up to a point -- but the result, as some pilot projects have learned the hard way, is neither performant nor reliable, and can end up being far more complex, costly and troublesome to maintain than initially projected.

OpenStack reality check

As it turns out, implementing OpenStack at scale can be quite challenging: at a minimum, it is a two-step process. First, you need to create a workable model for a future-proofed, reliable nodeset (deployment, controller, compute, storage and multi-layer networking, all in a high availability, or HA, configuration). Then you need to determine how to scale out this model manageably, reliably, quickly and cost-effectively, so that the resulting datacenter can grow at need without bottlenecks.

As many have discovered at implementation, choosing and integrating the right hardware is important in making this process smooth and functional. And -- as is often the case in open source -- the lure of custom-engineering “white box” hardware solutions is strong. But there’s an intrinsic problem here: direct use of white-box hardware makes you responsible both for engineering your own solutions and for assembling, deploying and managing them on your premises.

There are complexities aplenty here, and traps for the unwary. They range from the relatively simple (e.g., you order hardware with inaccessible power buttons) to the more complicated (e.g., you fail to account for switching redundancy in your unitized node design). Further, not everyone has the resources and bandwidth to smoothly manage a white-box deployment strategy at scale, which requires processes to manage orders, maintain inventory, and perform reliable assembly, QA and installation.

Use of pre-integrated, QA’d white-box hardware aggregations can make a lot of sense and can, in fact, duplicate the good results sought when implementors carefully configure their own white-box setup from compatible components. The goal is to produce an optimized hardware setup for a usable-at-scale OpenStack instance that can then be exploited as a unit for subsequent scale-out. Organizations like Rackspace and IO.com, which have lately deployed DC-a-a-S offerings using OpenStack and Open Compute “vanity-free” hardware, have reportedly performed exactly the same intermediate engineering step: creating a viable rack instance that can itself be scaled, and that can then be cloned to add reserve capacity at need.

OpenStack Pilot Production Rack

StackVelocity and Mirantis have teamed up to produce a highly customizable, scale-ready rack building block that can be used to anchor and build out a complete enterprise or service provider cloud. The result of this collaboration is documented in a white paper that details the OpenStack Pilot Production Rack (OPPR). StackVelocity is a business unit of global contract manufacturer Jabil Circuit, among the largest custom electronics makers in the world, and a supplier both to many name-brand equipment vendors and -- through a series of new initiatives including the StackVelocity unit -- directly to Tier 1 end users.

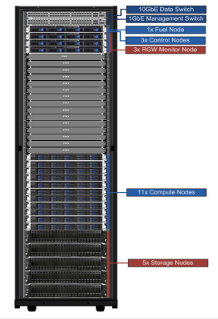

The OPPR is intended both as a reference solution and as a product for sale. In its base configuration, it supports a production pilot workload of about 450 guest instances, expandable to 1100 instances when available rack slots are fully populated. The base configuration (see illustration) tops the rack with a 10GbE data switch and a 1GbE management switch -- a configuration that segregates management and live traffic in a scalable way, while eliminating the need to configure VLANs extending beyond the local rack. Below these are one Fuel node for automated cluster deployment and sanity testing on the rack, and three controller nodes in active/hot standby/standby configuration under HAProxy. Each controller supports a MySQL instance replicated with Galera, using certification-based replication to permit opportunistic single-node transactions under global coordination that supports transaction rollback, atomicity and order (ACID model). Neutron L4-L7 networking, again in active/hot standby/standby configuration, is also supported here, along with the Ceph storage monitor.
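The capacity figures above lend themselves to a rough sizing calculation. The sketch below is a back-of-the-envelope estimate only: it assumes capacity grows linearly as identical racks are cloned and makes no allowance for failover headroom. The function name `racks_needed` and the 3000-instance target are hypothetical; only the 450 and 1100 instance figures come from the OPPR description.

```python
import math

# Per-rack guest-instance capacities quoted for the OPPR.
BASE_INSTANCES_PER_RACK = 450    # base configuration
FULL_INSTANCES_PER_RACK = 1100   # all available rack slots populated


def racks_needed(target_instances: int, instances_per_rack: int) -> int:
    """Return how many identical racks cover the target workload.

    Assumes (for illustration only) that capacity scales linearly with
    the number of cloned racks, with no reserve for failover.
    """
    if target_instances < 0:
        raise ValueError("target_instances must be non-negative")
    if instances_per_rack <= 0:
        raise ValueError("instances_per_rack must be positive")
    return math.ceil(target_instances / instances_per_rack)


if __name__ == "__main__":
    target = 3000  # hypothetical workload, not a figure from the article
    print("base racks:", racks_needed(target, BASE_INSTANCES_PER_RACK))
    print("fully populated racks:", racks_needed(target, FULL_INSTANCES_PER_RACK))
```

In practice a planner would likely add a reserve margin on top of this estimate, since a rack running at its quoted limit leaves no room to absorb a failed compute node.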

The Ceph system -- occupying the bottom-most five rack spaces in the OPPR base configuration -- manages file system, block and object storage. Availability and data integrity of the underlying object store here is managed by distributing objects over five zones, effectively reproducing the functionality of conventional RAID 5. The configuration permits decentralization of volume storage, so that if, for example, a host compute node fails, instances can be migrated to an available host without network or storage reconfiguration.

Networking in the OPPR is both conceptually simple and sophisticated. Five VLANs (A-E) segregate Fuel network traffic, OpenStack management traffic, storage, public and floating IPs (routable IP space), and the private network. These are mapped to individual physical NICs: one on the Fuel node, two on the controller nodes (e.g., for Fuel and management traffic), three on storage nodes (Fuel, management, storage), and four on compute nodes.
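The NIC layout described above can be written down as a small lookup table, which is handy for sanity-checking a cabling plan. This is only a sketch: the role and network labels are hypothetical names chosen for illustration, and the single NIC on the Fuel node is assumed to carry the Fuel network. Because the description does not say which four networks the compute nodes carry, that entry records only the NIC count.

```python
from typing import Optional

# Networks named in the OPPR description (labels are illustrative).
NETWORKS = ("fuel", "management", "storage", "public", "private")

# Node role -> (physical NIC count, networks carried if stated in the text).
# Assumption: the Fuel node's single NIC carries the Fuel network.
# The compute node's four networks are not listed in the description,
# so they are left as None rather than guessed.
NIC_PLAN: dict[str, tuple[int, Optional[tuple[str, ...]]]] = {
    "fuel": (1, ("fuel",)),
    "controller": (2, ("fuel", "management")),
    "storage": (3, ("fuel", "management", "storage")),
    "compute": (4, None),
}


def validate_plan(plan) -> list[str]:
    """Return a list of problems found in a NIC plan (empty if none)."""
    problems = []
    for role, (nic_count, networks) in plan.items():
        if networks is None:
            continue  # networks not specified; nothing to cross-check
        if len(networks) != nic_count:
            problems.append(
                f"{role}: {nic_count} NICs but {len(networks)} networks"
            )
        for net in networks:
            if net not in NETWORKS:
                problems.append(f"{role}: unknown network {net!r}")
    return problems


if __name__ == "__main__":
    for issue in validate_plan(NIC_PLAN):
        print(issue)
    print("NICs per role:", {role: n for role, (n, _) in NIC_PLAN.items()})
```

Keeping one network per physical NIC, as the plan does, means a mismatch between NIC count and network list is a quick signal that a node has been ordered or cabled incorrectly.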

Major components in the OPPR are integrated on 64-bit Ubuntu.

Service and support come with the OPPR purchase. One year of hardware support (parts and labor) with 8x5 remote assistance and advance replacement is the default option; help with deployment, extended hardware warranty, onsite replacement, spares inventory and 24x7 support are also available. The Mirantis OpenStack Starter Pack, also included by default, covers support for up to 20 nodes, one year of 8x5 software support, and Mirantis OpenStack Training, with additional support, training and professional services available.