Understanding your options: Deployment topologies for High Availability (HA) with OpenStack

When I was designing my first OpenStack infrastructure I struggled to find information on how to distribute its numerous parts across the hardware. I studied a number of documents, including Rackspace's reference architecture (which was once at http://referencearchitecture.org but now seems to be mothballed). I also went through the design diagrams presented in the OpenStack documentation. I must admit that back in those days I had only basic knowledge of how these components interact, so I ended up with a pretty simple setup: one "controller node" that included everything, including API services, nova-scheduler, Glance, Keystone, the database, and RabbitMQ. Under that I placed a farm of compute workhorses. I also wired three networks: private (for fixed IP traffic and server management), public (for floating IP traffic), and storage (for iSCSI nova-volume traffic).

When I joined Mirantis, it strongly affected my approach, as I realized that all my ideas involving a farm of dedicated compute nodes plus one or two controller nodes were wrong. While it can be a good approach from the standpoint of keeping everything tidily separated, in practice we can easily mix and match workhorse components without overloading OpenStack (e.g. nova-compute with nova-scheduler on one host). It turns out that in OpenStack, "controller node" and "compute node" can have variable meanings given how flexibly OpenStack components are deployed.

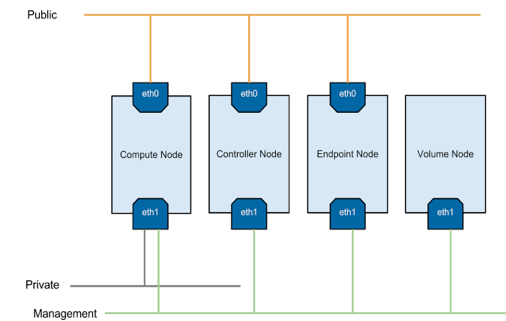

In general, one can assume that each OpenStack deployment needs to contain at least three types of nodes (with a possible fourth), which my colleague Oleg Gelbukh has outlined:

- Endpoint node: This node runs load balancing and high availability services that may include load-balancing software and clustering applications. A dedicated load-balancing network appliance can serve as an endpoint node. A cluster should have at least two endpoint nodes configured for redundancy.

- Controller node: This node hosts communication services that support operation of the whole cloud, including the queue server, state database, Horizon dashboard, and possibly a monitoring system. This node can optionally host the nova-scheduler service and API servers load balanced by the endpoint node. At least two controller nodes must exist in a cluster to provide redundancy. The controller node and endpoint node can be combined in a single physical server, but it will require changes in configuration of the nova services to move them from ports utilized by the load balancer.

- Compute node: This node hosts a hypervisor and virtual instances, and provides compute resources to them. The compute node can also serve as the network controller for instances it hosts, if a multihost network scheme is in use. It can also host non-demanding internal OpenStack services, like scheduler, glance-api, etc.

- Volume node: This is used if you want to use the nova-volume service. This node hosts the nova-volume service and also serves as an iSCSI target.

While the endpoint node's role is obvious (it typically hosts the load-balancing software or appliance that distributes traffic evenly across OpenStack components and provides high availability), the controller and compute nodes can be set up in many different ways. Controllers range from "fat" nodes, which host all the OpenStack internal daemons (scheduler, API services, Glance, Keystone, RabbitMQ, MySQL), to "thin" nodes, which host only those services responsible for maintaining OpenStack's state (RabbitMQ and MySQL). In the thin case, compute nodes can take over some of OpenStack's internal processing by hosting API services and scheduler instances.
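The fat/thin split can be sketched as a small Python model. This is only an illustration: the service names come from the paragraph above, while the two set names and both helper functions are hypothetical and not part of any OpenStack tooling.

```python
# Services that hold OpenStack's state (per the article).
STATE_SERVICES = {"rabbitmq", "mysql"}
# Internal daemons that can live on either controllers or compute nodes.
INTERNAL_DAEMONS = {"nova-scheduler", "nova-api", "glance", "keystone"}


def controller_services(profile):
    """Return the services a controller hosts for a 'fat' or 'thin' profile."""
    if profile == "thin":
        return set(STATE_SERVICES)
    if profile == "fat":
        return STATE_SERVICES | INTERNAL_DAEMONS
    raise ValueError(f"unknown controller profile: {profile!r}")


def compute_services(profile):
    """Compute nodes pick up the internal daemons the controller does not host."""
    return {"nova-compute"} | (INTERNAL_DAEMONS - controller_services(profile))


for profile in ("fat", "thin"):
    print(profile, "controller:", sorted(controller_services(profile)))
    print(profile, "compute:   ", sorted(compute_services(profile)))
```

With a thin controller, the compute set grows to include the API and scheduler daemons, which is the arrangement used in the hardware load balancer topology.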

At Mirantis we have deployed service topologies for a wide range of clients. Here I'll walk through them with diagrams and look at the different ways in which OpenStack can be deployed. (Of course, service decoupling can go even further.)

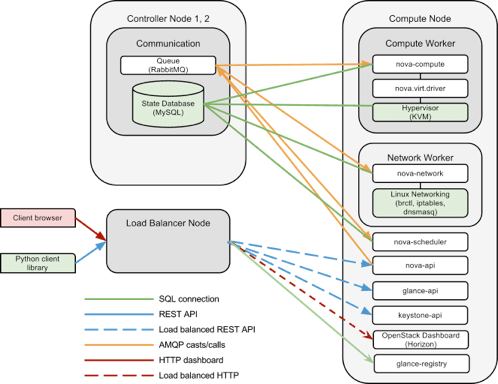

Topology with a hardware load balancer

In this deployment variation, a hardware load balancer appliance provides the connection endpoint to OpenStack services. API servers and nova-scheduler instances are deployed on compute nodes, while glance-registry instances and Horizon are deployed on controller nodes.

All the native Nova components are stateless web services; this allows you to scale them by adding more instances to the pool (see the Mirantis blog post on scaling API services for details). That's why we can safely distribute them across a farm of compute nodes. The database and message queue server can be deployed on both controller nodes in a clustered fashion (my earlier post shows how to do it). Even better: the controller node now hosts only platform components that are not OpenStack internal services (MySQL and RabbitMQ are standard Linux daemons), so the cloud administrator can hand their administration over to an external entity, such as a database team or a dedicated RabbitMQ cluster. This way, the central controller node disappears and we end up with a bunch of compute/API nodes, which we can scale almost linearly.

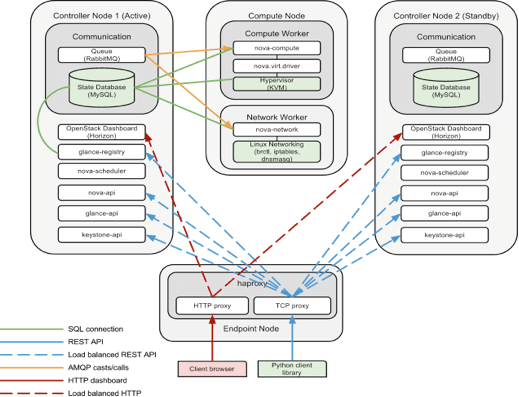

Topology with a dedicated endpoint node

In this deployment configuration, we replace the hardware load balancer with an endpoint host that distributes traffic to a farm of services. Another major difference compared to the previous architecture is the placement of API services on controller nodes instead of compute nodes. Essentially, controller nodes have become "fatter" while compute nodes are "thinner." Also, both controllers operate in active/standby fashion. Controller node failure conditions can be identified with tools such as Pacemaker and Corosync/Heartbeat.

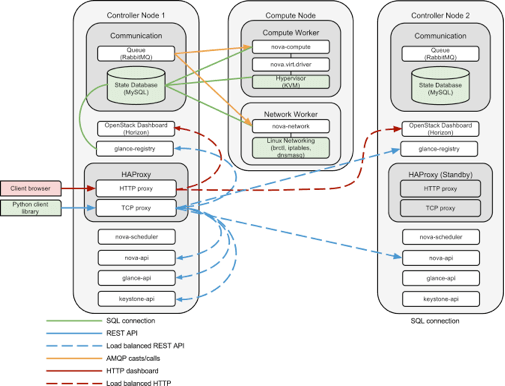

Topology with simple controller redundancy

In this deployment, endpoint nodes are combined with controller nodes. API services and nova-schedulers are also deployed on controller nodes and the controller can be scaled by adding nodes and reconfiguring HAProxy. Two instances of HAProxy are deployed to assure high availability, and detection of failures and promotion of a given HAProxy from standby to active can be done with tools such as Pacemaker and Corosync/Heartbeat.
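As a rough sketch of what "adding nodes and reconfiguring HAProxy" involves, the Python helper below renders an HAProxy `listen` section for one API service. The function name, the virtual IP, and the controller addresses are hypothetical; 8774 is the conventional nova-api port, and `listen`, `bind`, `balance`, and `server ... check` are standard HAProxy directives.

```python
def render_listen_block(name, vip, port, backends):
    """Render an HAProxy 'listen' section balancing one service across backends.

    backends is a list of (hostname, address) tuples, one per controller.
    """
    lines = [
        f"listen {name}",
        f"    bind {vip}:{port}",
        "    balance roundrobin",
    ]
    for host, addr in backends:
        # 'check' enables health checks so a failed controller is taken out of rotation.
        lines.append(f"    server {host} {addr}:{port} check")
    return "\n".join(lines)


# Hypothetical addresses, for illustration only.
controllers = [("controller1", "10.0.0.11"), ("controller2", "10.0.0.12")]
print(render_listen_block("nova-api", "192.0.2.10", 8774, controllers))
```

Scaling the controller tier then amounts to appending another tuple to the backend list and reloading HAProxy on both endpoint instances.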

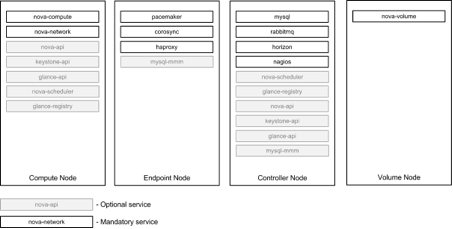

Many ways to distribute services

I've shown you service distribution across physical nodes, which Mirantis has done for various clients. However, sysadmins can mix and match them in a completely different way to suit their needs. The diagram below shows—based on our experience at Mirantis—how OpenStack services can be distributed across different node types.

Hardware considerations for different node types

The main load on the endpoint node falls on its network subsystem. This type of node requires substantial CPU performance and network throughput. It is also useful to bond network interfaces for redundancy and increased bandwidth, if possible.

The cloud controller can be fat or thin. The minimum configuration it can host includes those pieces of OpenStack that maintain the system state: the database and the AMQP server. A redundant cloud controller configuration requires at least two hosts, and we recommend network interface bonding for network redundancy and RAID1 or RAID10 for storage redundancy. The following configuration can be considered the minimum for a controller node:

- Single 6-core CPU

- 8GB RAM

- 2x 1TB HDD in software RAID1

Compute nodes require as much memory and CPU power as possible. Requirements for the disk system are not very restrictive, though the use of SSDs can increase performance dramatically (since instance filesystems typically reside on the local disk). It is possible to use a single disk in a non-redundant configuration and, in case of failure, replace the disk and return the server to the cluster as a new compute node.

In fact, the requirements for compute node hardware depend on customer evaluation of average virtual instance parameters and desired density of instances per physical host.
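A back-of-the-envelope density estimate can be sketched as follows. The function, its parameter names, and the sample figures are illustrative assumptions rather than Mirantis sizing guidance; the overcommit ratios stand in for whatever your scheduler is configured to allow.

```python
import math


def instances_per_host(host_ram_gb, host_cores, vm_ram_gb, vm_vcpus,
                       cpu_overcommit=1.0, ram_overcommit=1.0,
                       reserved_ram_gb=0.0):
    """Estimate how many average-sized instances fit on one compute node.

    The result is bounded by whichever resource (RAM or CPU) runs out first.
    """
    usable_ram = (host_ram_gb - reserved_ram_gb) * ram_overcommit
    by_ram = math.floor(usable_ram / vm_ram_gb)
    by_cpu = math.floor(host_cores * cpu_overcommit / vm_vcpus)
    return max(0, min(by_ram, by_cpu))


# Hypothetical host: 96 GB RAM, 16 cores, 8 GB reserved for the hypervisor,
# average instance of 4 GB / 2 vCPUs, CPU overcommitted 4:1.
print(instances_per_host(96, 16, 4, 2, cpu_overcommit=4.0, reserved_ram_gb=8))  # 22
```

In this example RAM is the limiting resource (22 instances by RAM versus 32 by CPU), which suggests spending the hardware budget on memory before cores.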

Volume controller nodes provide a persistent block storage feature to instances. Since block storage typically contains vital data, it is very important to ensure its availability and consistency. The volume node must contain at least six disks. We recommend installing the operating system on a redundant disk array (RAID1) built from two of them. The remaining four disks are assembled in a RAID5 or RAID10 array, depending on the configuration of the RAID controller.
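To compare the two options for the four data disks, here is a small sketch of usable capacity per RAID level using the standard formulas (RAID1 mirrors one disk, RAID5 loses one disk to parity, RAID10 loses half to mirroring). The function name and the 1 TB disk size are assumptions for illustration.

```python
def usable_capacity_tb(level, disks, disk_tb):
    """Return usable capacity in TB for a RAID array of identical disks."""
    if level == "raid1":
        if disks < 2:
            raise ValueError("RAID1 needs at least 2 disks")
        return disk_tb  # every disk holds the same data
    if level == "raid5":
        if disks < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        return (disks - 1) * disk_tb  # one disk's worth of parity
    if level == "raid10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        return (disks // 2) * disk_tb  # striped mirrors
    raise ValueError(f"unsupported RAID level: {level!r}")


# Four 1 TB data disks, as in the six-disk volume node described above.
print(usable_capacity_tb("raid5", 4, 1.0))   # 3.0
print(usable_capacity_tb("raid10", 4, 1.0))  # 2.0
```

RAID5 yields more space from the same disks, while RAID10 trades capacity for better write performance and tolerance of some two-disk failures.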

Block volumes are shared via iSCSI protocol, which means high loads on the network subsystem. We recommend at least two bonded interfaces for iSCSI data exchange, possibly tuned for this type of traffic (jumbo frames, etc.).

Network topology

OpenStack network topology resembles a traditional data center. (Other Mirantis posts provide a deeper insight into OpenStack networking: FlatDHCPManager, VlanManager.) Instances communicate internally over fixed IPs (a data center private network). This network is hidden from the world by NAT and a firewall provided by the nova-network component. For external presence, a public network is provided where floating IPs are exposed (data center DMZ). A management network is used to administer servers (data center IPMI/BMC network). We can also have a separate storage network for nova-volumes if necessary (data center storage network). The following diagram depicts the cloud topology (in this case, however, iSCSI traffic shares the management network). The two networks on eth1 are just tagged interfaces configured on top of eth1 using an 802.1q kernel module.

The public network has two purposes:

- Expose instances on floating IPs to the rest of the world.

- Expose Virtual IPs of the endpoint node that are used by clients to connect to OpenStack services APIs.

The public network is usually isolated from the private and management networks. A public/corporate network is a single class C network from the cloud owner's public network range (for public clouds it is globally routed).

The private network is a network segment connected to all the compute nodes; all the bridges on the compute nodes are connected to this network. This is where instances exchange their fixed IP traffic. If VlanManager is in use, this network is further segmented into isolated VLANs, one per project existing in the cloud. Each VLAN contains an IP network dedicated to this project and connects virtual instances that belong to this project. If a FlatDHCP scheme is used, instances from different projects all share the same VLAN and IP space.
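The per-project segmentation under VlanManager can be illustrated with Python's standard `ipaddress` module. This is a sketch of the idea only, not how nova-network allocates networks internally; the function name, the fixed range, the /24 subnet size, and the starting VLAN ID are all assumptions.

```python
import ipaddress


def allocate_project_networks(fixed_range, projects, prefixlen=24, first_vlan=100):
    """Assign each project its own VLAN ID and IP subnet from the fixed range."""
    subnets = ipaddress.ip_network(fixed_range).subnets(new_prefix=prefixlen)
    plan = {}
    for offset, project in enumerate(projects):
        try:
            network = next(subnets)
        except StopIteration:
            raise ValueError("fixed range is too small for all projects") from None
        plan[project] = {"vlan": first_vlan + offset, "network": str(network)}
    return plan


# Hypothetical projects sharing a 10.0.0.0/16 fixed range.
for project, net in allocate_project_networks("10.0.0.0/16", ["alpha", "beta"]).items():
    print(project, net)
```

Under a FlatDHCP scheme there is no such split: every project would draw addresses from the same network on the same segment.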

The management network connects all the cluster nodes and is used to exchange internal data between components of the OpenStack cluster. This network must be isolated from private and public networks for security reasons. The management network can also be used to serve the iSCSI protocol exchange between the compute and volume nodes if the traffic is not intensive. This network is a single class C network from a private IP address range (not globally routed).

The iSCSI network is not required unless your workload involves heavy processing on persistent block storage. In this case, we recommend iSCSI on dedicated wiring to keep it from interfering with management traffic and to potentially introduce some iSCSI optimizations like jumbo frames, queue lengths on interfaces, etc.

OpenStack daemons vs. networks

In high availability mode, all the OpenStack central components need to be put behind a load balancer. For this purpose, you can use dedicated hardware or an endpoint node. An endpoint node runs high-availability/load-balancing software and hides a farm of OpenStack daemons behind a single IP. The following table shows the placement of services on different networks under the load balancer:

| OpenStack component | Host placement | Network placement | Remarks |
|---|---|---|---|
| nova-api, glance-api, glance-registry, keystone-api, Horizon | controller/compute | public network | Since these services are accessed directly by users (API endpoints), it is logical to put them on the public net. |
| nova-scheduler | controller/compute | mgmt network | |
| nova-compute | compute | mgmt network | |
| nova-network | controller/compute | mgmt network | |
| MySQL | controller | mgmt network | Including replication/HA traffic |
| RabbitMQ | controller | mgmt network | Including RabbitMQ cluster traffic |
| nova-volume | volume | mgmt network | |
| iSCSI | volume | mgmt network (dedicated VLAN) or separate iSCSI network | In case of high block storage traffic, you should use a dedicated network. |
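The same placement data can be kept as a lookup structure, for example as a starting point for generating firewall rules or load balancer pools per network. The dictionary below simply transcribes the table; the variable and function names are hypothetical, and iSCSI is recorded on the management network even though a dedicated network is an equally valid choice.

```python
# service -> (host placement, network placement), transcribed from the table.
PLACEMENT = {
    "nova-api": ("controller/compute", "public"),
    "glance-api": ("controller/compute", "public"),
    "glance-registry": ("controller/compute", "public"),
    "keystone-api": ("controller/compute", "public"),
    "horizon": ("controller/compute", "public"),
    "nova-scheduler": ("controller/compute", "mgmt"),
    "nova-compute": ("compute", "mgmt"),
    "nova-network": ("controller/compute", "mgmt"),
    "mysql": ("controller", "mgmt"),
    "rabbitmq": ("controller", "mgmt"),
    "nova-volume": ("volume", "mgmt"),
    "iscsi": ("volume", "mgmt"),  # or a separate iSCSI network under heavy load
}


def services_on(network):
    """Return the sorted names of services placed on the given network."""
    return sorted(name for name, (_, net) in PLACEMENT.items() if net == network)


print(services_on("public"))
```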

Conclusion

OpenStack deployments can be organized in many ways to achieve scalability and availability. This fact is not obvious from the online documentation (at least it wasn't for me), and I've seen a couple of deployments where sysadmins were sure they needed a central controller node. This isn't true: it is possible to have an installation with no controller node at all, where the database and messaging server are hosted by an external entity.

When architectures are distributed, you need to properly spread traffic across many instances of a service and also provide replication of stateful resources (like MySQL and RabbitMQ). The OpenStack folks haven't provided any documentation on this so far, so Mirantis has been trying to fill this gap by producing a series of posts on scaling platform and API services.

Links

- Rackspace Open Cloud Reference Architecture

- OpenStack Documentation / Compute Administration Manual: Example Installation Architectures

- Ken Pepple: OpenStack Nova Architecture

- OpenStack Wiki: Real Deployments

- OpenStack Documentation: Compute and Image System Requirements

- OpenStack Documentation / Compute Administration Manual: Service Architecture